August 2, 2018

In today’s world, we are building and designing systems at a scale never seen before. Every microservice that we build is either stateful or stateless, and building replicated systems for either kind is quite challenging. But before we dig into this, let’s first look more closely at these two kinds of services for background and context.

Stateless services are comparatively easy to manage and to scale. We don’t need to worry about a node going down or the service becoming unavailable: because no node holds state, a client can simply start using another node.

Stateful services are more challenging to manage because they hold state, so we need to build them with the ramifications of nodes going down and the service becoming unavailable in mind. Essentially, we need to make these services fault tolerant.

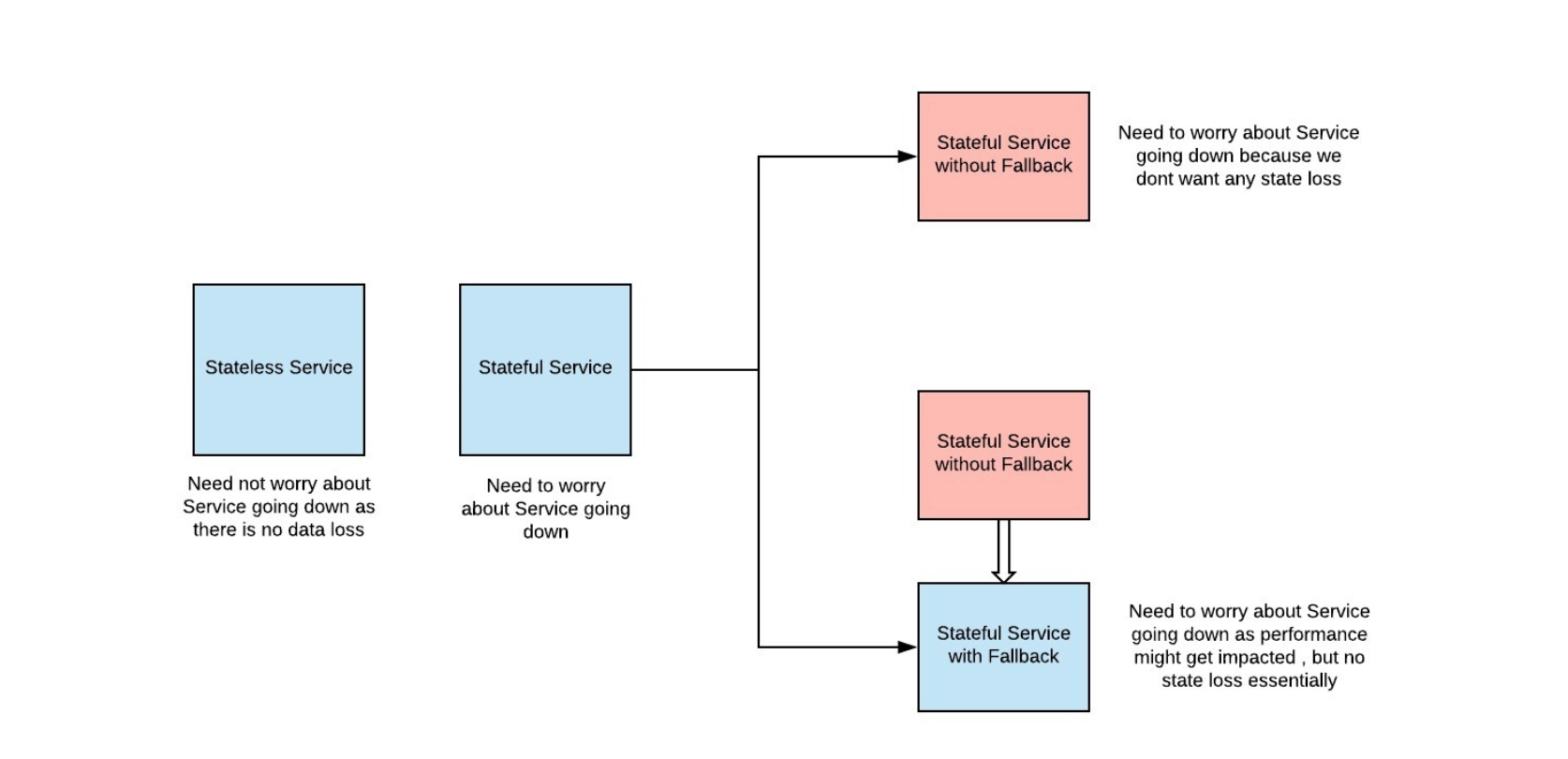

There might be two kinds of stateful services:

Building Fault-tolerant Services

In this post, we are going to talk about how we can make sure that stateful services without fallback remain fault tolerant.

Let’s first see how we can make these services fault tolerant:

The takeaway is that we might need to build our own systems which have to support replication for availability.

The book “Designing Data-Intensive Applications” defines replication as keeping a copy of the same data on several different nodes, potentially in different locations. Replication provides redundancy: if some nodes are unavailable, the data can still be served from the remaining nodes. Replication can also help improve performance.

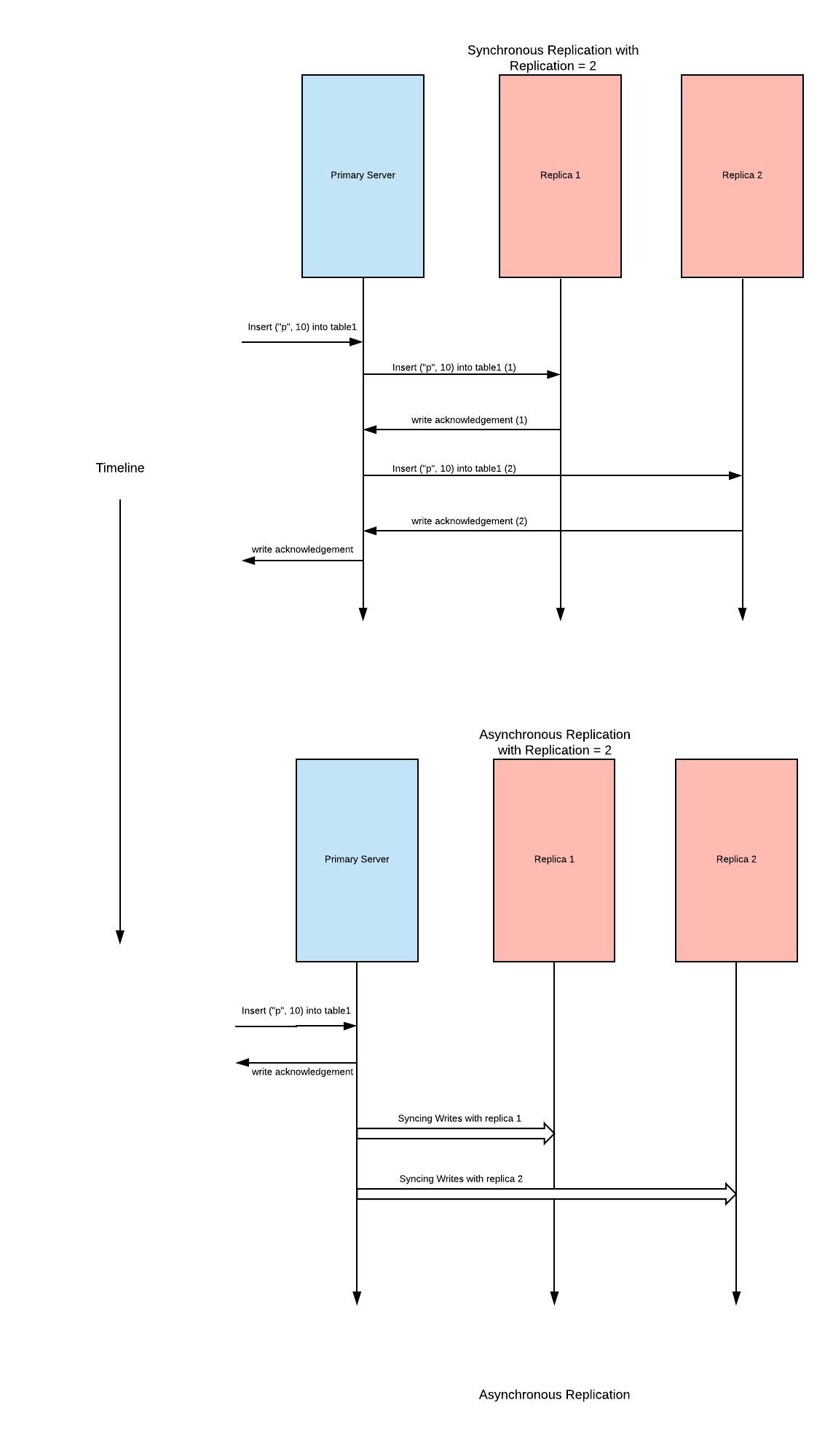

There are two kinds of replication models:

Both of these replication techniques have their downsides. Synchronous replication guarantees no data loss at the expense of higher WRITE latencies and reduced fault tolerance, whereas asynchronous replication provides much better WRITE latencies (thanks to fewer network calls and batched syncs) at the expense of possible data loss if the primary fails before the replicas have caught up.
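To make the trade-off concrete, here is a minimal in-memory sketch of the two models. It is an illustration only: the class names (`Replica`, `SyncPrimary`, `AsyncPrimary`) are hypothetical, there is no networking, and a real system would also have to handle failures and retries.

```python
from collections import deque


class Replica:
    """An in-memory replica that stores key/value pairs."""

    def __init__(self):
        self.data = {}

    def apply(self, key, value):
        self.data[key] = value


class SyncPrimary:
    """Acknowledges a write only after every replica has applied it."""

    def __init__(self, replicas):
        self.data = {}
        self.replicas = replicas

    def write(self, key, value):
        self.data[key] = value
        for replica in self.replicas:  # one blocking call per replica
            replica.apply(key, value)
        return "ack"


class AsyncPrimary:
    """Acknowledges immediately and ships writes to replicas later."""

    def __init__(self, replicas):
        self.data = {}
        self.replicas = replicas
        self.pending = deque()  # writes not yet replicated

    def write(self, key, value):
        self.data[key] = value
        self.pending.append((key, value))
        return "ack"  # replicas may still be behind at this point

    def flush(self):
        """Batch-sync all pending writes to every replica."""
        while self.pending:
            key, value = self.pending.popleft()
            for replica in self.replicas:
                replica.apply(key, value)
```

In the asynchronous variant, anything still sitting in `pending` when the primary crashes is lost, which is the data-loss risk described above.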

Note: Neither of these techniques is straightforward to implement, and both require a deep understanding of the common pitfalls of replication in general. See this link for more details.

Apache Kafka is an open source stream processing system that makes it much easier to solve replication issues, and I’ll walk you through some use cases. As already mentioned, replication is challenging to implement unless you have prior experience with it at production scale.

Here are the top three replication challenges:

Kafka helps us solve these three challenges, and we will leverage it to implement replication for our service.

Note: To take full advantage of Kafka, you first need a basic understanding of Kafka partitions. You can read more here for additional background.

In short, a Kafka partition can be thought of as a logical, ordered log to which producers write data and from which consumers read data in order. Each partition is replicated across different nodes, so you need not worry about its fault tolerance. Every record written to a Kafka partition is stored at a particular offset, and when reading, a consumer specifies the offset from which it wants to consume.
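The offset model can be sketched with a toy append-only log. This is not the Kafka client API; `Partition`, `append`, and `read` are hypothetical names used only to illustrate how writes receive offsets and how reads start from a chosen offset.

```python
class Partition:
    """A toy stand-in for a Kafka partition: an append-only, ordered log."""

    def __init__(self):
        self._log = []

    def append(self, record):
        """Append a record and return the offset it was written at."""
        self._log.append(record)
        return len(self._log) - 1

    def read(self, offset, max_records=100):
        """Return up to max_records records starting at the given offset."""
        return self._log[offset:offset + max_records]


partition = Partition()
first_offset = partition.append("set x=1")   # written at offset 0
second_offset = partition.append("set x=2")  # written at offset 1

# A consumer that has already processed offset 0 resumes from offset 1.
unseen = partition.read(offset=1)
```

Because records are never reordered or overwritten, any reader that starts at offset 0 and reads to the end sees exactly the same sequence of writes.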

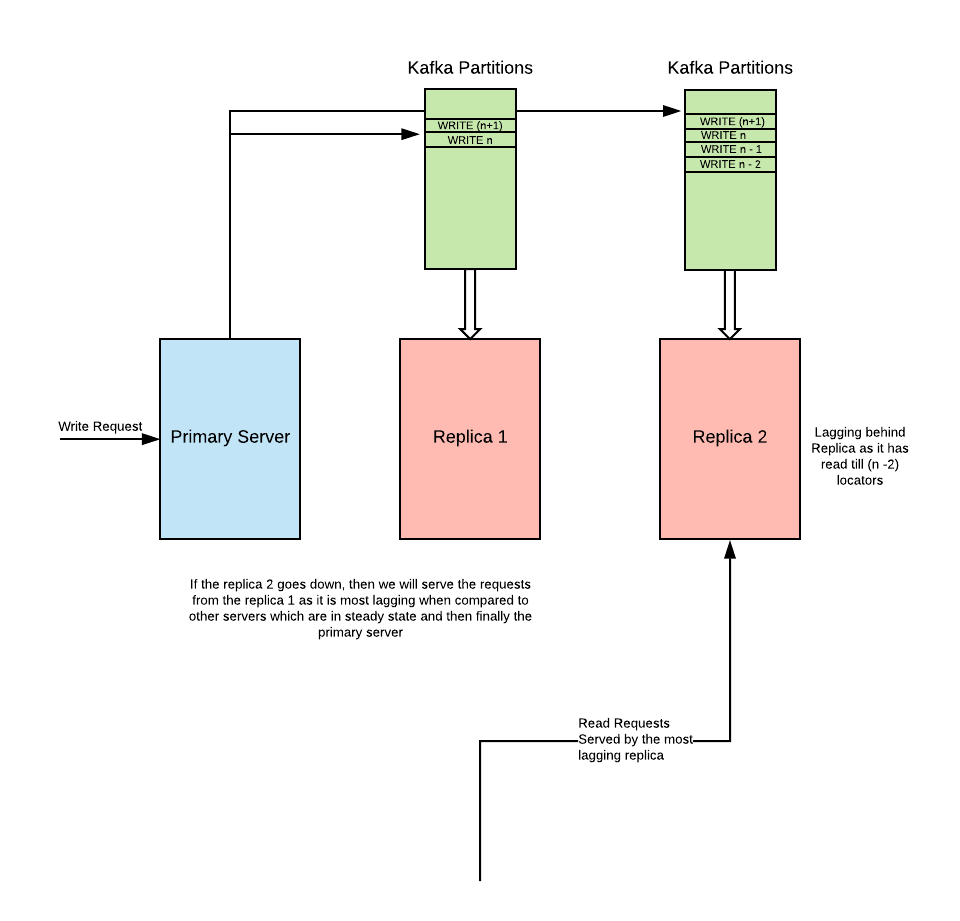

First, we need to make sure that we have one Kafka partition for every replica of our primary server.

For example, if you want two replicas for every primary server, then you need two Kafka partitions, one for each replica.

After we have this setup ready, then:

Some of the replicas might lag behind because of systemic issues that are outside our control, but eventually all of the replicas will have the same state.
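The flow described above can be sketched in memory as follows. This is a simplified model under stated assumptions: each partition is a plain Python list rather than a real Kafka partition, the `Primary` and `Replica` classes are hypothetical, and the primary is assumed to apply a write locally before appending it to every replica’s partition.

```python
class Replica:
    """Rebuilds the primary's state by replaying its own partition."""

    def __init__(self, partition):
        self.partition = partition  # this replica's log (a plain list here)
        self.next_offset = 0        # first offset not yet applied
        self.state = {}

    def sync(self, max_records=None):
        """Apply unseen records; a lagging replica can catch up later."""
        end = len(self.partition)
        if max_records is not None:
            end = min(end, self.next_offset + max_records)
        for key, value in self.partition[self.next_offset:end]:
            self.state[key] = value
        self.next_offset = end


class Primary:
    """Applies each write locally, then appends it to every replica's partition."""

    def __init__(self, partitions):
        self.partitions = partitions
        self.state = {}

    def write(self, key, value):
        self.state[key] = value
        for partition in self.partitions:
            partition.append((key, value))


partitions = [[], []]  # one partition per replica
replicas = [Replica(p) for p in partitions]
primary = Primary(partitions)

primary.write("x", 1)
primary.write("y", 2)

replicas[0].sync()               # fully caught up
replicas[1].sync(max_records=1)  # lagging: has only applied the first write
lagging_state = dict(replicas[1].state)
replicas[1].sync()               # catches up later
```

After the final `sync()`, both replicas hold the same state as the primary, which is the eventual consistency the text describes.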

Figure: This diagram shows the interaction between the client and the primary server along with the replicas.

In this design, we need not worry about the details of implementing replication, because Kafka gives us this out of the box.

There are a few things that we might still need to implement in this design:

Now let’s discuss the positive points of this design:

Old_Request_Latency = PWT_1 + PWT_2 + … + PWT_n

New_Request_Latency = PWT_1 + KWT_2 + … + KWT_n

where

PWT_1 = Time taken to process the request on Node 1

PWT_2 = Time taken to process the request on Node 2

and so on

KWT_2 = Time taken to write the request to the Kafka partition for replica 2

KWT_3 = Time taken to write the request to the Kafka partition for replica 3

and so on

Old_Request_Latency covers writing the request to all of the available replicas.

New_Request_Latency covers writing the request to one of the primary servers and making sure the write request is written to all the concerned partitions.
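As a worked example of the two formulas, the sketch below plugs in made-up numbers. The values (5 ms per node, 2 ms per Kafka write, three nodes) are assumptions chosen purely for illustration and are not measurements; real values depend heavily on the workload and the cluster.

```python
def old_request_latency(pwt):
    """Old_Request_Latency: sum of processing times on every node (PWT_1..PWT_n)."""
    return sum(pwt)


def new_request_latency(pwt_1, kwt):
    """New_Request_Latency: PWT_1 plus one Kafka write per replica (KWT_2..KWT_n)."""
    return pwt_1 + sum(kwt)


# Hypothetical timings in milliseconds for n = 3 nodes.
pwt_ms = [5, 5, 5]  # PWT_1, PWT_2, PWT_3
kwt_ms = [2, 2]     # KWT_2, KWT_3

old_ms = old_request_latency(pwt_ms)             # 5 + 5 + 5 = 15
new_ms = new_request_latency(pwt_ms[0], kwt_ms)  # 5 + 2 + 2 = 9
```

The result depends entirely on how the Kafka write time compares with the per-node processing time, so these numbers should not be read as a general claim about which design is faster.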

Strictly speaking, the latencies of these two subsystems cannot be compared directly. That said, introducing Kafka adds an extra hop, so there will be some additional overhead, which should be minimal given Kafka’s low latencies.

When the primary writes to its replicas directly, a replica with high latency at some moment (because of long GC pauses or a slow disk) can increase the latency of WRITE requests in general. With Kafka, we avoid this issue: we just add the WRITE request to the replica’s Kafka partition, and it becomes the replica’s responsibility to sync itself whenever it has spare CPU cycles.

Apart from these pros, there are obviously some cons in this design as well.

Note: Write latencies for smaller payloads would increase by a higher percentage than latencies for bigger payloads. This is because, for smaller payloads, the majority of the time is spent on the network round trip, so if we increase the number of round trips, this time is bound to increase. If we batch several write requests into a single write request and then write it to a Kafka partition, the overhead of this approach should be minimal.
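The effect of batching can be illustrated with a simple cost model. The model is an assumption, not a measurement: each request pays one fixed round-trip cost plus a small per-write cost, and the function name and the numbers below are hypothetical.

```python
def total_time_us(num_writes, batch_size, round_trip_us, per_write_us):
    """Time to ship num_writes when each request carries batch_size writes."""
    num_requests = -(-num_writes // batch_size)  # ceiling division
    return num_requests * round_trip_us + num_writes * per_write_us


# Hypothetical costs: 1000 us per round trip, 10 us of work per write.
unbatched_us = total_time_us(100, batch_size=1, round_trip_us=1000, per_write_us=10)
batched_us = total_time_us(100, batch_size=50, round_trip_us=1000, per_write_us=10)
```

With these numbers, 100 unbatched writes cost 101000 µs, while the same writes sent in batches of 50 cost 3000 µs, because the round-trip cost is paid twice instead of 100 times.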

Also, there is one important optimization that we can make to the current design to improve the write latency.

In the current design, we have n Kafka partitions for the n replicas. If we use a single Kafka partition for all n replicas instead, we get better write throughput, because we no longer need to make a write request to every Kafka partition (n Kafka partitions in total).

The only requirement is that the n Kafka consumers (running on the n different replicas) must belong to different consumer groups, because Kafka does not allow two consumers in the same consumer group to consume the same Kafka topic partition (KTP).

So having n Kafka consumers, each belonging to a different consumer group, should improve write throughput and latency, because all n consumers read the WRITE requests from a single KTP.
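The single-partition variant can be sketched with a toy model in which every consumer group keeps its own read position. `SharedPartition`, `poll`, and the group names are hypothetical; this is not the Kafka client API, and the model assumes that a new group starts reading from the beginning of the log.

```python
class SharedPartition:
    """Toy model of one Kafka partition read by several consumer groups."""

    def __init__(self):
        self._log = []
        self._committed = {}  # consumer group id -> next offset to read

    def append(self, record):
        self._log.append(record)

    def poll(self, group_id):
        """Return records this group has not consumed yet and advance its offset."""
        start = self._committed.get(group_id, 0)
        records = self._log[start:]
        self._committed[group_id] = len(self._log)
        return records


ktp = SharedPartition()
ktp.append(("x", 1))  # the primary writes each request once
ktp.append(("y", 2))

# Each replica uses its own consumer group, so each one sees every record.
seen_by_replica_1 = ktp.poll("replica-1-group")
seen_by_replica_2 = ktp.poll("replica-2-group")
second_poll = ktp.poll("replica-1-group")  # nothing new for this group
```

The primary now performs one write per request regardless of the number of replicas, while each group’s independent offset still lets every replica replay the full sequence of writes.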

Figure: Diagram explaining the working of replication with single partition used across all replicas

In every stateful system, we end up solving the same replication problem over and over. However, we now know that we can delegate this painstaking task to another service (such as Kafka) and ensure that all the replicas of a stateful system can reconstruct the state of the primary server.

For additional context, check out this small library, which shows how you can leverage Kafka to build replicated systems. It can be plugged into any stateful system.

By implementing the APIs for this library in your master and replica nodes, you will get eventually consistent replicas for your primary stateful system out of the box.
