Linux - Cloud Security Monitoring and Analytics - OpenTelemetry

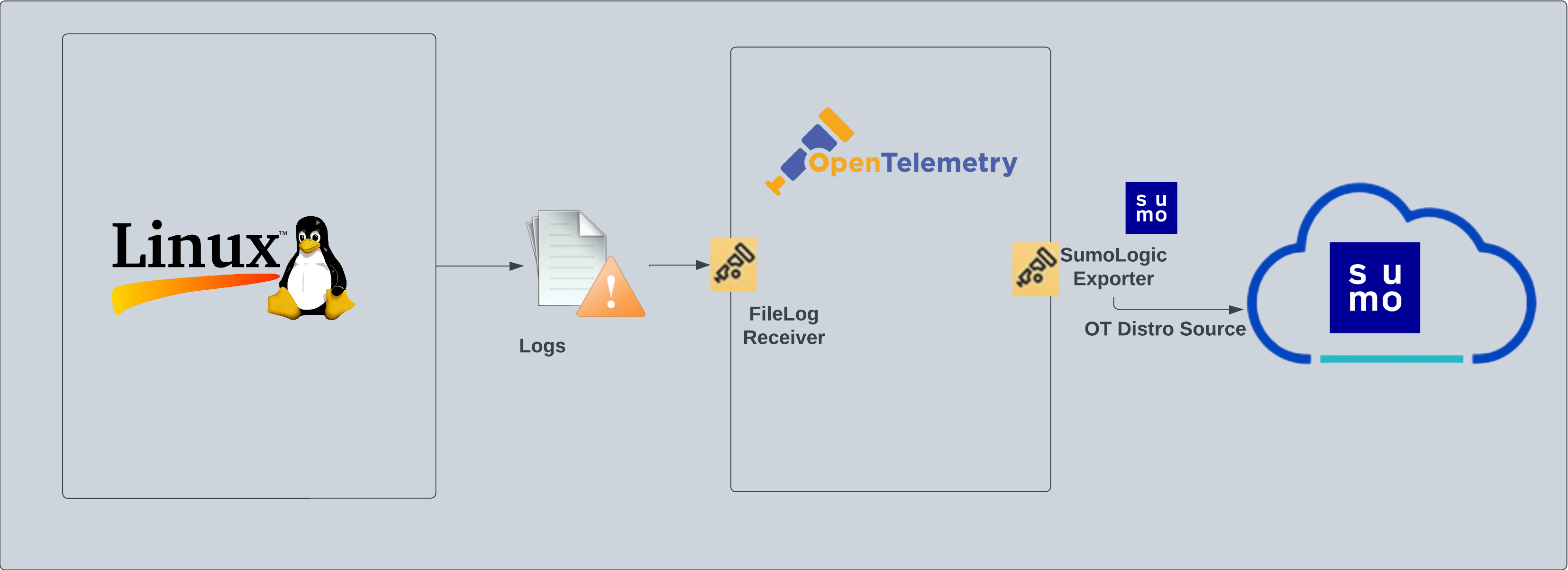

Linux - Cloud Security Monitoring and Analytics - OpenTelemetry is a unified log app that ingests data from Linux distributions into Sumo Logic via the OpenTelemetry filelog receiver. The app's preconfigured dashboards provide insight into user, service, system, login, and privilege activity. They give you a better understanding of your production environments and surface relevant insights, and you can tune the out-of-the-box content to align with your security team's focus.

Fields created in Sumo Logic for Linux - Security Analytics

The following tag will be created as part of Linux app installation, if not already present.

- sumo.datasource. Has a fixed value of linux.

Prerequisites

This app is based on the following log files from the Ubuntu Linux machine.

- auth.log

- syslog

- daemon.log

- dpkg.log

- kern.log

For CentOS, Amazon Linux, and Red Hat machines, the log files are:

- audit/audit.log

- secure

- messages

- yum.log

If you've already configured collection of these log files (for example, during Linux or Linux - PCI app setup), then no additional log file collection is required. If any of the log files are missing, you can configure the missing file collection in the next step.

For Linux systems with ACL support, the otelcol install process should have created the ACL grants necessary for the otelcol system user to access default log locations. You can verify the active ACL grants using the getfacl command. If ACL tooling is not installed in your Linux environment, install it first.

In rare cases the required ACL may not be supported, for example on a Linux distribution that Sumo Logic does not officially support. In that case, you can run the following command to explicitly grant the permissions.

sudo setfacl -R -m d:u:otelcol-sumo:r-x,d:g:otelcol-sumo:r-x,u:otelcol-sumo:r-x,g:otelcol-sumo:r-x <PATH_TO_LOG_FILE>

Run the above command for every log file that needs to be ingested and does not reside in the default location.

If Linux ACL support is not available, traditional Unix-style user and group permissions must be modified. It should be sufficient to add the otelcol system user to the group that has access to the log files.
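Before moving on, it can help to confirm that the collector's user can actually read each log file. The following Python sketch is one way to check this and is not part of the Sumo Logic tooling: it reports which of the given paths are missing or unreadable for the user running it. The function name `find_unreadable` and the `/var/log` locations are illustrative assumptions; substitute the paths of the log files listed above for your distribution.

```python
import os


def find_unreadable(paths):
    """Return the paths that the current user cannot read (or that do not exist)."""
    # os.access asks the operating system whether the calling user may read the file.
    return [path for path in paths if not os.access(path, os.R_OK)]


# Hypothetical Ubuntu-style locations; replace with your own log file paths.
candidates = ["/var/log/auth.log", "/var/log/syslog"]

for path in find_unreadable(candidates):
    print(f"not readable: {path}")
```

For the result to be meaningful, run the script as the collector's system user (the setfacl command above uses the name otelcol-sumo), for example through `sudo -u`.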

Collection configuration and app installation

Follow these steps to set up and deploy the source template to collect data in Sumo Logic from a remotely managed OpenTelemetry collector.

Step 1: Set up remotely managed OpenTelemetry collector

If you want to configure your source locally, you can do so by downloading the YAML file. For details, see Configure OpenTelemetry collectors locally.

In this step, we'll install a remotely managed OpenTelemetry collector and add a uniquely identifiable tag to it.

- Classic UI. In the main Sumo Logic menu, select App Catalog.

New UI. In the Sumo Logic main menu, select App Catalog.

- In the 🔎 Search Apps field, run a search for your desired OpenTelemetry app, then select it. For example, Apache - OpenTelemetry.

- Click the Manage dropdown and then select Add Collection.

- On the Set Up Collector page, select Add a New Collector and then click Next.

If you want to use an existing OpenTelemetry Collector, you can skip this step by selecting the Use an existing Collector option.

- Select the platform where you want to install the Sumo Logic OpenTelemetry Collector. For example, Linux.

This will generate a command that you can execute in the machine environment you need to monitor. Once executed, it will install the Sumo Logic OpenTelemetry Collector.

The installation commands now include a --remotely-managed flag. When this flag is used, a remotely managed collector is installed automatically instead of a locally managed one. If you prefer to use a locally managed collector, simply remove the flag from the installation command.

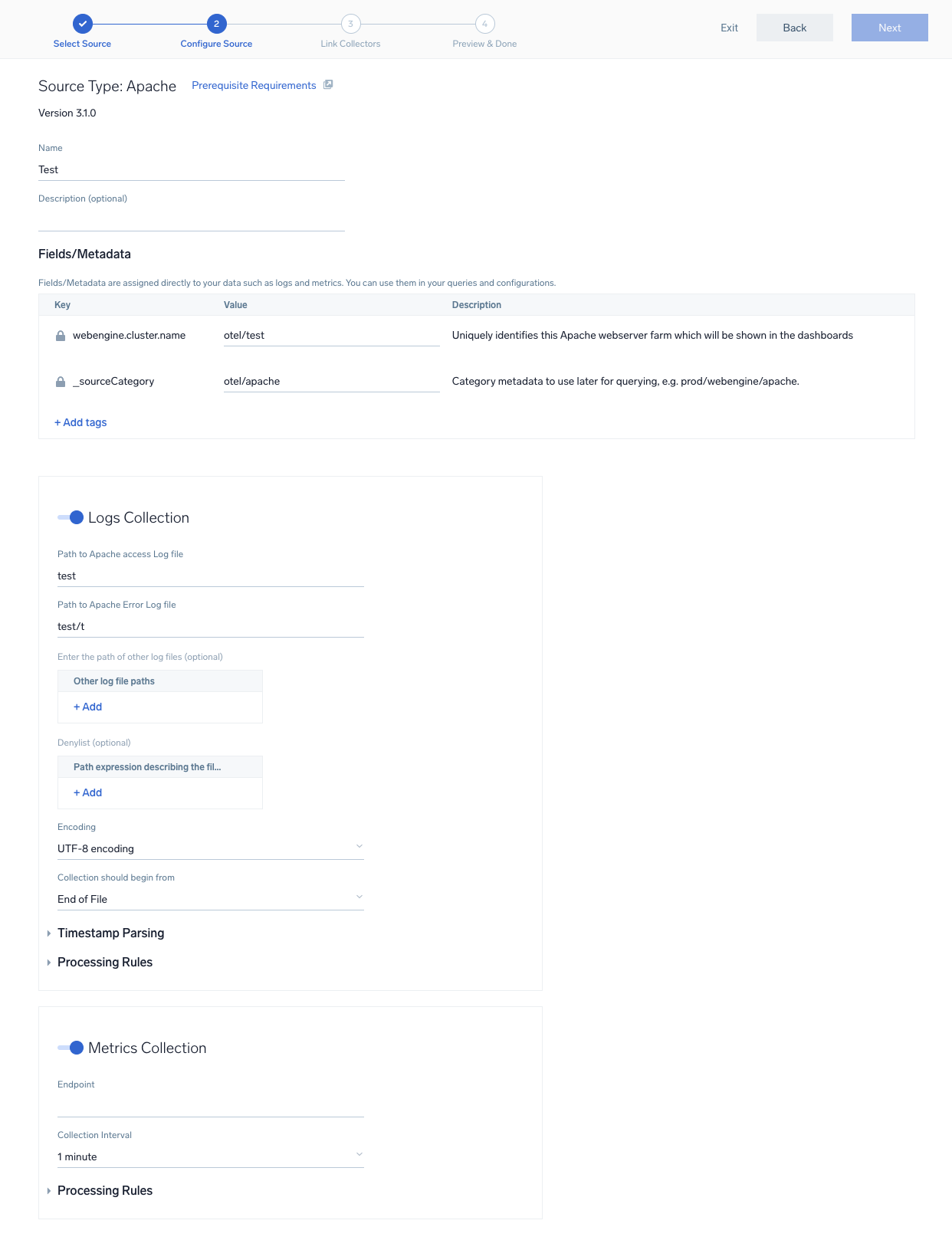

Step 2: Configure the source template

In this step, you will configure the YAML required for Linux Collection. Below are the inputs required for configuration:

- Name. Name of the source template.

- Description. Description for the source template.

Logs collection

- Fields/Metadata. You can provide any custom fields to be tagged with the data collected. By default, Sumo Logic tags _sourceCategory with the value otel/linux.

- Logs. The following fields are pre-populated with default paths for common log files that are used in different Linux distributions. Not all paths might be relevant for your operating system. Modify the list of files as required or leave the default values.

Metrics collection

- Metrics. Select the metric scrapers you want to enable. By default, metric collection for CPU, memory, disk, load, file system, network, and paging is enabled, and process metric collection is disabled.

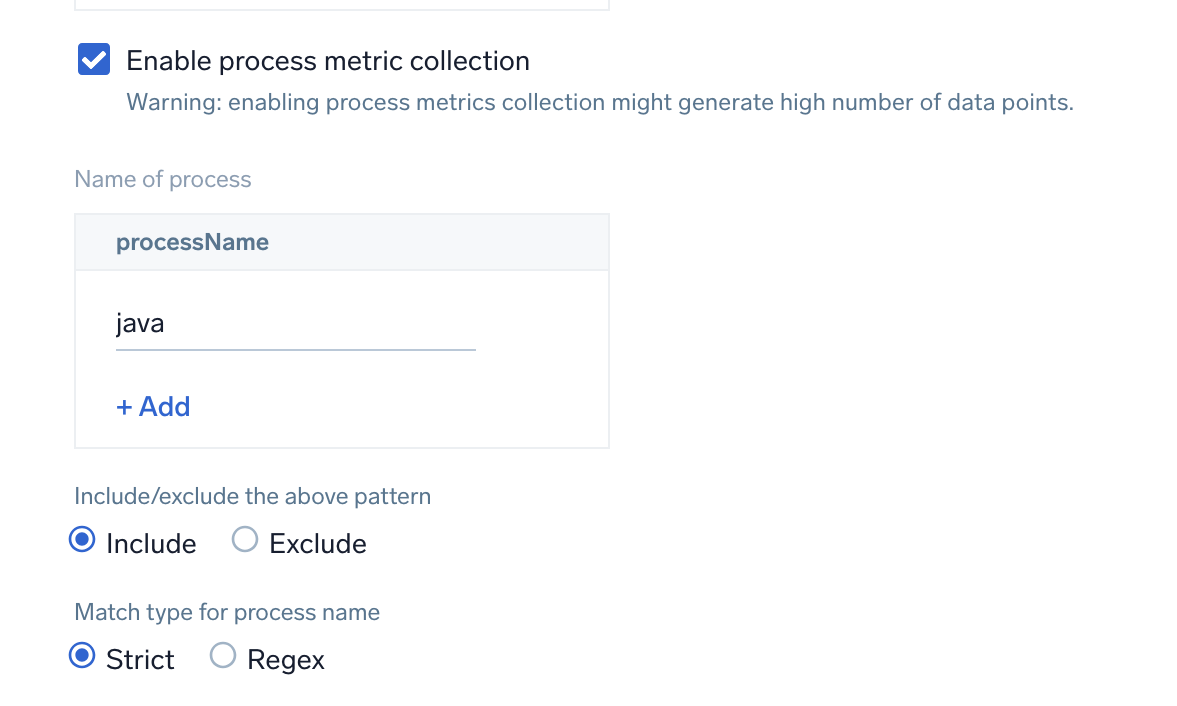

Enable process metric collection (optional)

By default, the collector will not send process metrics to Sumo Logic. This is because the number of processes running on a host can be very large, which would result in a significant increase in Data Points per Minute (DPM).

Click the Enable process metric collection checkbox to collect process-level metrics.

- Name of process. Add the list of process names.

- Include/Exclude the above pattern. Signifies if you want to exclude or include the metrics for the processes listed previously.

- Match type for process name. Select whether the given process name should be matched strictly against the host machine processes or treated as a regular expression when matching.

If you need to edit the process list in the future, you can do this manually in the OTel config YAML by adding or removing entries in the names list under the process scraper.

process:
  include:
    names: [ <process name1>, <process name2> ... ]
    match_type: <strict|regexp>

- Scan Interval. The frequency at which the source is scanned.

- Processing Rules. You can add processing rules for logs/metrics collected. To learn more, refer to Processing Rules.
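To make the include/exclude and match-type options for process metrics concrete, here is a minimal Python sketch of how such a filter could behave. It is an illustration under assumptions, not the collector's implementation: `strict` is taken to mean an exact name comparison, `regexp` is taken to mean an unanchored regular-expression search, and the function name `keep_process` and the sample process names are hypothetical.

```python
import re


def keep_process(name, names, match_type="strict", mode="include"):
    """Decide whether metrics for the process `name` would be kept.

    names      -- entries from the `names` list in the config
    match_type -- "strict" (exact match) or "regexp" (pattern search); assumed semantics
    mode       -- "include" keeps only matches, "exclude" drops matches
    """
    if match_type == "strict":
        matched = name in names
    elif match_type == "regexp":
        matched = any(re.search(pattern, name) for pattern in names)
    else:
        raise ValueError(f"unknown match_type: {match_type}")
    return matched if mode == "include" else not matched


running = ["sshd", "nginx", "nginx-worker", "cron"]  # hypothetical host processes

print([p for p in running if keep_process(p, ["nginx"], "strict")])
print([p for p in running if keep_process(p, ["^nginx"], "regexp")])
print([p for p in running if keep_process(p, ["nginx"], "strict", "exclude")])
```

Under these assumptions, a strict match on `nginx` keeps only the process with exactly that name, while the pattern `^nginx` also keeps `nginx-worker`. A pattern that is too broad increases the number of processes reported, and with it the DPM.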

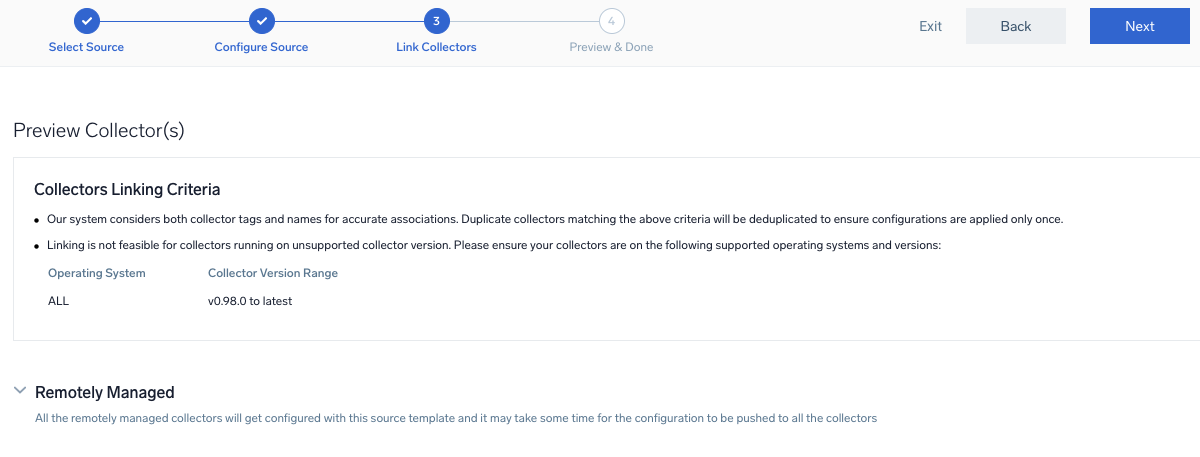

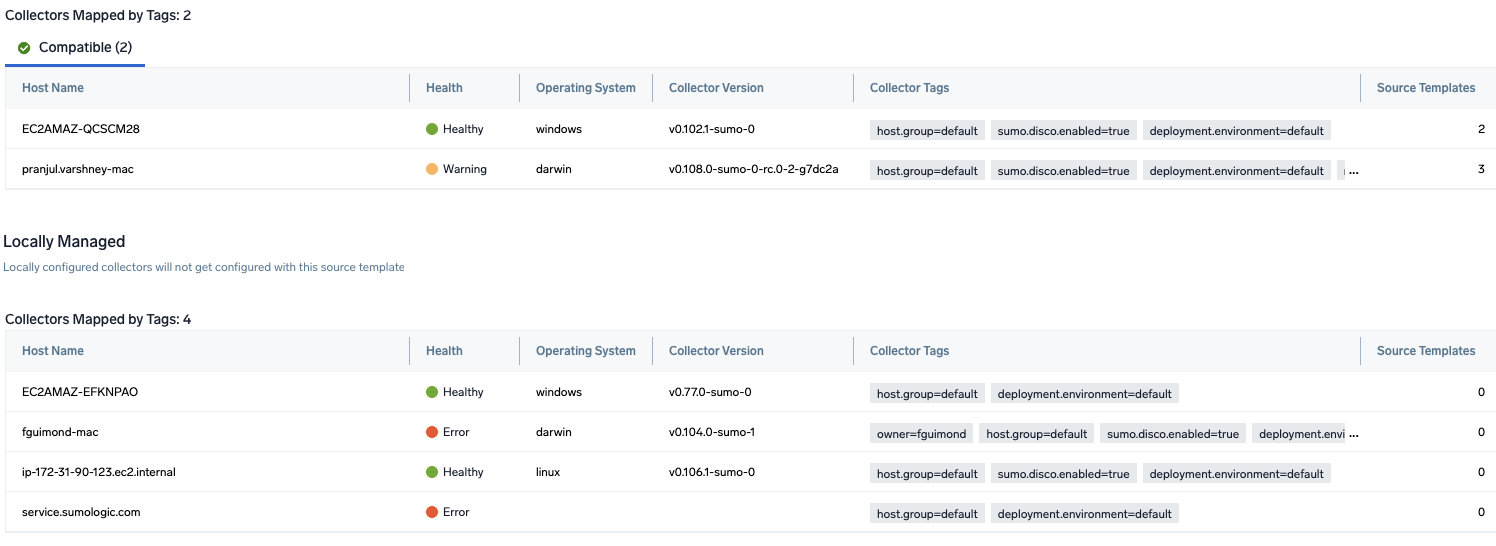

Step 3: Push the source template to the desired remotely managed collectors

A new source template will always be created with the latest version of the source template.

Follow the below steps to create a data collection configuration to gather the required logs and link them to all the collectors with the help of collector tags.

- Complete the source template form with the name and file path for your logs (for example, error logs or access logs), then click Next.

- Under Link Collectors, you will have the option to link the collectors using the collector name or by adding tags to find the group of collectors (for example, application = Apache).

- Preview and confirm the collectors that will be linked (fetched automatically) to the newly created source template.

- Click Next to complete the source template creation. In the background, the system will apply the configuration to all the linked collectors and will start collecting the respective telemetry data from the remote host (in the example, it would start collecting Apache error logs).

- Click the Log Search or Metrics Search icons to search for and analyze your data collected for this source template.

Sample log messages

Dec 13 04:44:00 <1> [zypper++] Summary.cc(readPool):133 I_TsU(27372)Mesa-libGL1-8.0.4-20.4.1.i586(@System)

Sample queries

sumo.datasource=linux deployment.environment=* host.group=* host.name=*

| parse regex "\S*\s+\d+\s+\d+:\d+:\d+\s+(?<dest_host>\S*)\s+(?<process>\w*)(?:\[\d+\]:|:)\s*(?<message>.+)$" nodrop

| if (isEmpty(dest_host), _sourceHost, dest_host) as dest_host

| parse regex "(?<service>\w*)\[\d+\]:\s+"

| where !isEmpty(service)

| where dest_host matches "*"

| where process matches "*"

| count as eventCount, first(_messagetime) as latest, last(_messagetime) as earliest by service, dest_host

| formatDate(fromMillis(latest),"MM/dd/yyyy HH:mm:ss Z") as %"LatestTime"

| formatDate(fromMillis(earliest),"MM/dd/yyyy HH:mm:ss Z") as %"EarliestTime"

| fields -latest, earliest, eventCount

| sort by %"LatestTime"
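The two `parse regex` stages of this query can be tried offline before running it. The Python sketch below reuses the same patterns, rewritten with Python's `(?P<name>...)` group syntax, against a hypothetical syslog-style line (the host `web01`, the process, and the message are made up for illustration). It mirrors only the field extraction, not the aggregation stages, and Sumo Logic's regex engine may differ from Python's in edge cases.

```python
import re

# Same pattern as the first parse stage, with Python-style named groups.
HOST_PROCESS = re.compile(
    r"\S*\s+\d+\s+\d+:\d+:\d+\s+(?P<dest_host>\S*)\s+"
    r"(?P<process>\w*)(?:\[\d+\]:|:)\s*(?P<message>.+)$"
)
# Same pattern as the second parse stage.
SERVICE = re.compile(r"(?P<service>\w*)\[\d+\]:\s+")


def extract_fields(line):
    """Return the fields that the two patterns extract from one log line."""
    fields = {}
    host_match = HOST_PROCESS.search(line)
    if host_match:
        fields.update(host_match.groupdict())
    # The query's second parse has no nodrop, so lines without a service are dropped there;
    # this sketch simply leaves the "service" key out instead.
    service_match = SERVICE.search(line)
    if service_match:
        fields["service"] = service_match.group("service")
    return fields


# Hypothetical log line for illustration only.
sample = "Dec 13 04:44:00 web01 sshd[2211]: Failed password for root from 10.0.0.5 port 22 ssh2"
print(extract_fields(sample))
```

A line that yields no `service` key here would be filtered out by the `where !isEmpty(service)` stage of the query.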

Viewing Linux - Cloud Security Monitoring and Analytics dashboards

All dashboards have a set of filters that you can apply to the entire dashboard. Use these filters to drill down and examine the data to a granular level.

- You can change the time range for a dashboard or panel by selecting a predefined interval from a drop-down list, choosing a recently used time range, or specifying custom dates and times.

- If required, configure the refresh interval rate for a dashboard or panel by clicking the drop-down arrow next to the refresh icon.

- Click the funnel icon in the dashboard top menu bar to filter the dashboard with template variables.

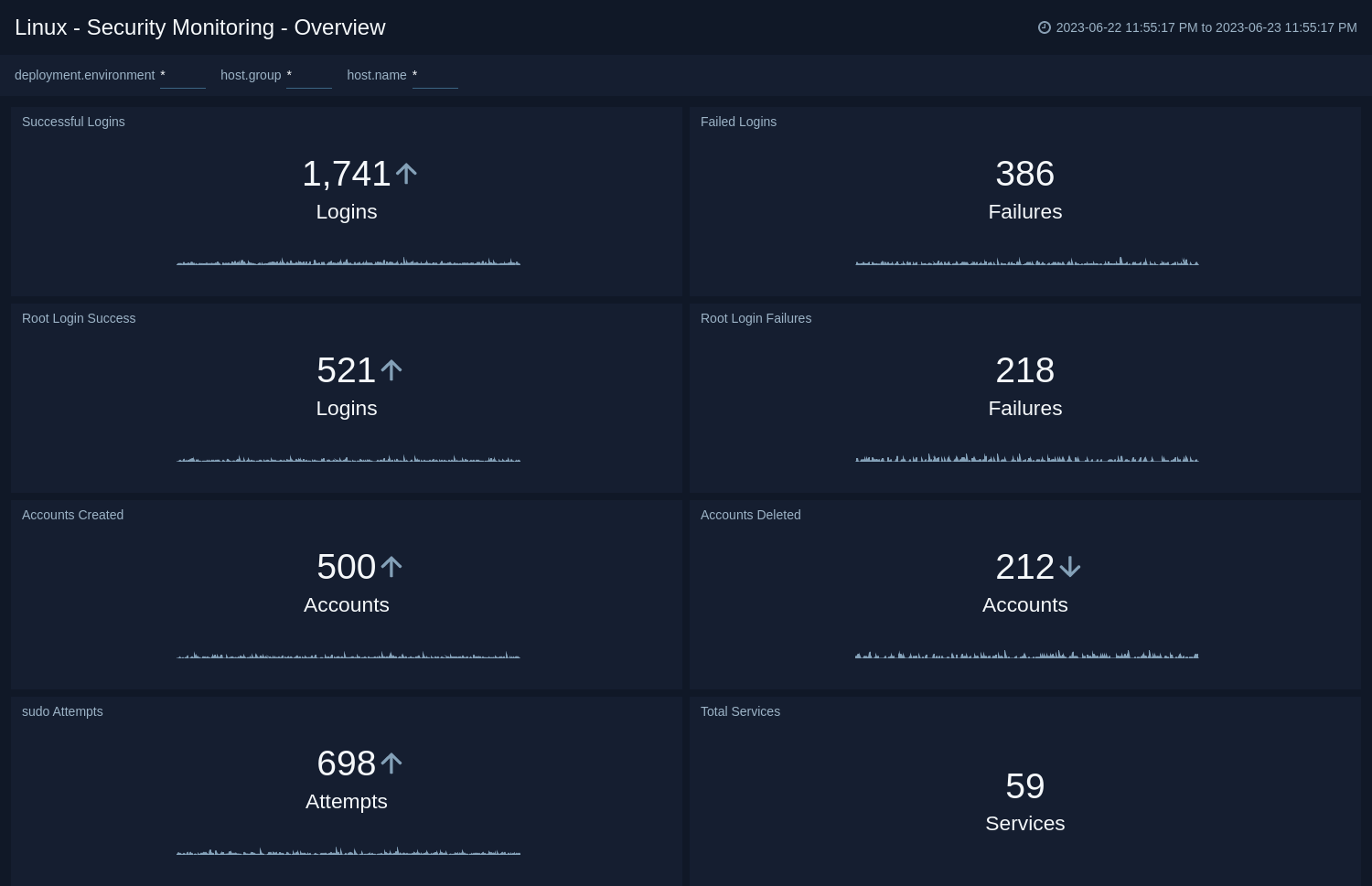

Overview

The Linux - Security Analytics - Overview dashboard provides an overview of security statistics relevant for Linux systems and presents information about successful and failed logins, root login successes and failures, user accounts created and deleted, sudo attempts, and total services.

Use this dashboard to:

- Monitor administrative actions (create, delete users) performed by end users.

- Ensure proper services are running on all systems.

- Detect attempts to change the system time.

- Verify the status of critical systems.

- Monitor excessive failed login attempts and detect total number of attempts to break into the system.

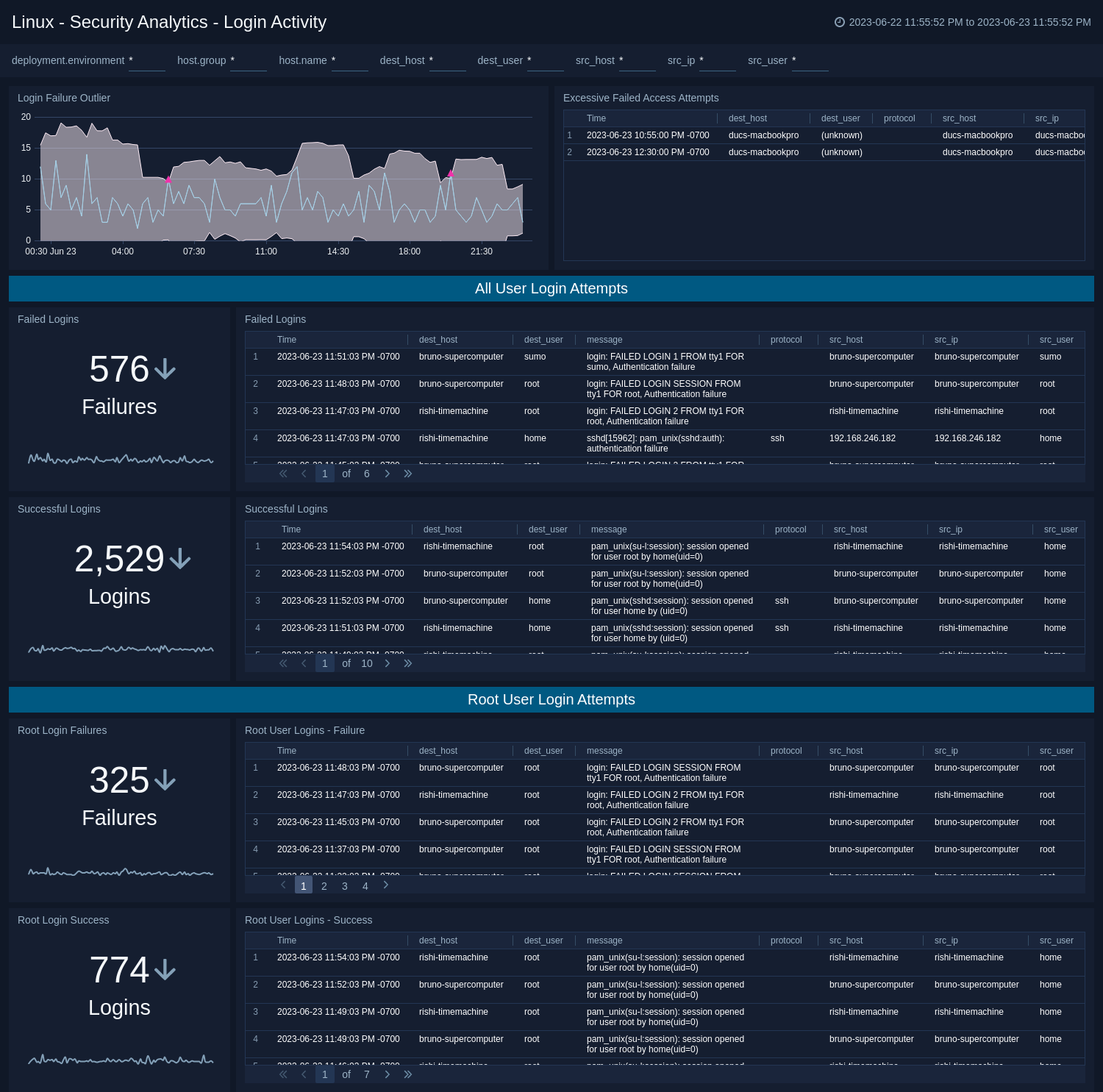

Login Activity

The Linux - Security Analytics - Login Activity dashboard tracks the login activity and provides information about failed and successful user and root logins.

Use this dashboard to:

- Monitor access to the Linux computing environment.

- Monitor failed and successful user logins.

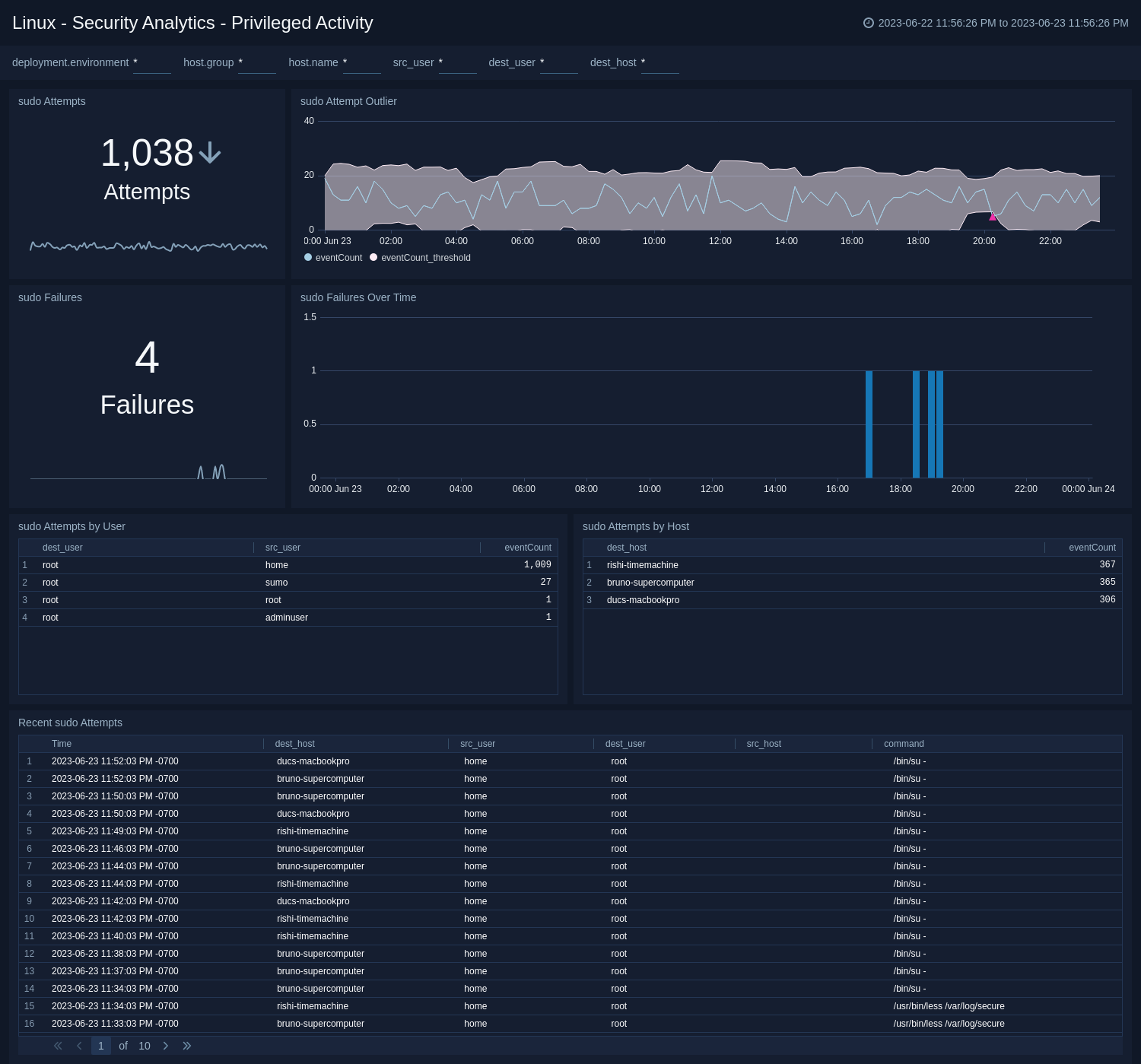

Privileged Activity

The Linux - Security Analytics - Privileged Activity dashboard provides information about total sudo attempts, failed sudo attempts, top 10 users and hosts that have issued sudo attempts, recent sudo attempts, and sudo attempts over time.

Use this dashboard to:

- Monitor successful and failed access attempts to systems with administrative privileges.

- Monitor actions performed by users with administrative privileges.

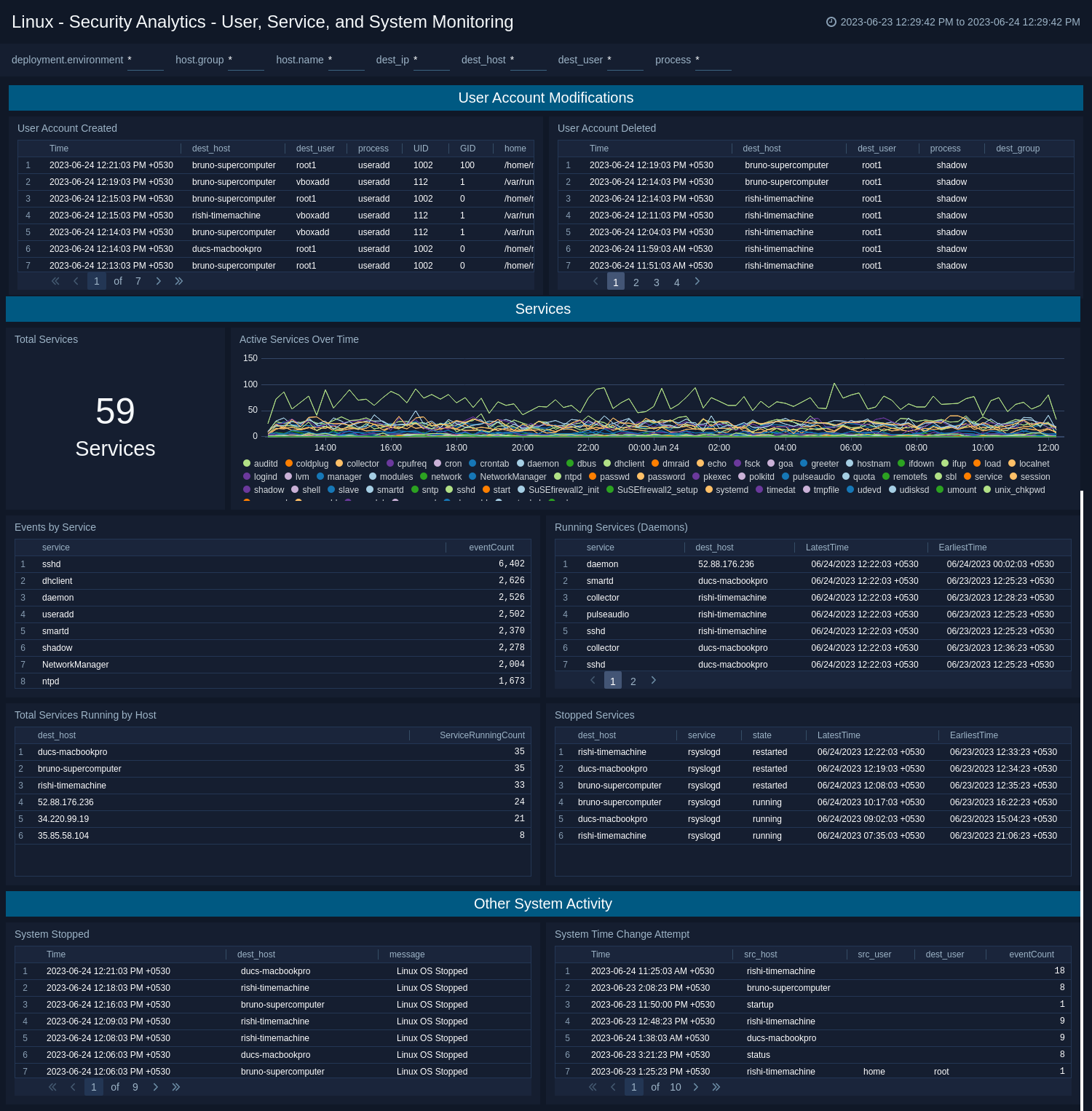

User, Service, and System Monitoring

The Linux - Security Analytics - User, Service, and System Monitoring dashboard provides information about user accounts created and deleted, service usage, and other system activity.

Use this dashboard to:

- Monitor accounts created and deleted.

- Monitor service usage and other system activity.

Create monitors for Linux - Cloud Security Monitoring and Analytics app

From your App Catalog:

- From the Sumo Logic navigation, select App Catalog.

- In the Search Apps field, search for and then select your app.

- Make sure the app is installed.

- Navigate to the What's Included tab and scroll down to the Monitors section.

- Click Create next to the pre-configured monitors. In the create monitors window, adjust the trigger conditions and notifications settings based on your requirements.

- Scroll down to Monitor Details.

- Under Location, click New Folder.

Note: By default, the monitor is saved in the root folder. To make maintenance easier, create a new folder in the location of your choice.

- Enter Folder Name. Folder Description is optional.

Tip: Including the app version in the folder name helps you track versions for future updates.

- Click Create. Once the folder is created, click Save.

Linux - Cloud Security Monitoring and Analytics alerts

| Name | Description | Alert Condition | Recover Condition |
|---|---|---|---|
| Linux CSMA - Excessive Failed Authentication | This alert is triggered when multiple failed login attempts from the same source are detected, indicating potential brute force attempts. | Count > 10 | Count <= 10 |
| Linux CSMA - Root Login Detection | This alert is triggered when direct login attempts using the root account are detected, indicating a potential security concern since root logins should typically be disabled. | Count >= 1 | Count < 1 |
| Linux CSMA - System Shutdown Detection | This alert is triggered when unexpected system shutdown events are detected through kernel stop messages, which may indicate unauthorized access, malicious activity, power issues, or system failures. | Count > 0 | Count <= 0 |
| Linux CSMA - User Account Creation Spike | This alert is triggered when multiple user accounts are created in a short time period, which may indicate automated account creation, compromised systems, or unauthorized bulk user provisioning. | Count > 3 | Count <= 3 |
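The alert and recover conditions for these monitors are complements of each other: a monitor is in the alert state while its count satisfies the alert condition and recovers otherwise. The Python sketch below illustrates that relationship using the thresholds from the alerts listed here. The `evaluate` function and the dictionary layout are hypothetical and do not describe how Sumo Logic evaluates monitors internally.

```python
import operator

# Alert thresholds copied from the alert list; the evaluation logic is an illustrative assumption.
ALERT_RULES = {
    "Linux CSMA - Excessive Failed Authentication": (operator.gt, 10),
    "Linux CSMA - Root Login Detection": (operator.ge, 1),
    "Linux CSMA - System Shutdown Detection": (operator.gt, 0),
    "Linux CSMA - User Account Creation Spike": (operator.gt, 3),
}


def evaluate(name, count):
    """Return "alert" when the alert condition holds for `count`, otherwise "recovered"."""
    compare, threshold = ALERT_RULES[name]
    return "alert" if compare(count, threshold) else "recovered"


print(evaluate("Linux CSMA - Excessive Failed Authentication", 11))
print(evaluate("Linux CSMA - Root Login Detection", 0))
```

When you adjust trigger conditions while creating a monitor, keeping the recover condition as the exact complement of the alert condition avoids a range of counts where the monitor is in neither state.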