With approximately 1.145 trillion MB of data generated daily, big data continues to take center stage in the business world. Successfully analyzing the information from these massive data sets can empower nearly every facet of a business. Additionally, the modern world of work has become increasingly digitized, which means technical teams are now essential for every business.

As more businesses adopt hyperscale cloud providers and microservices for modern applications, machine data is produced at an ever-higher rate, adding to the vast data deluge. But alongside the notable benefits of big data, a laundry list of challenges looms.

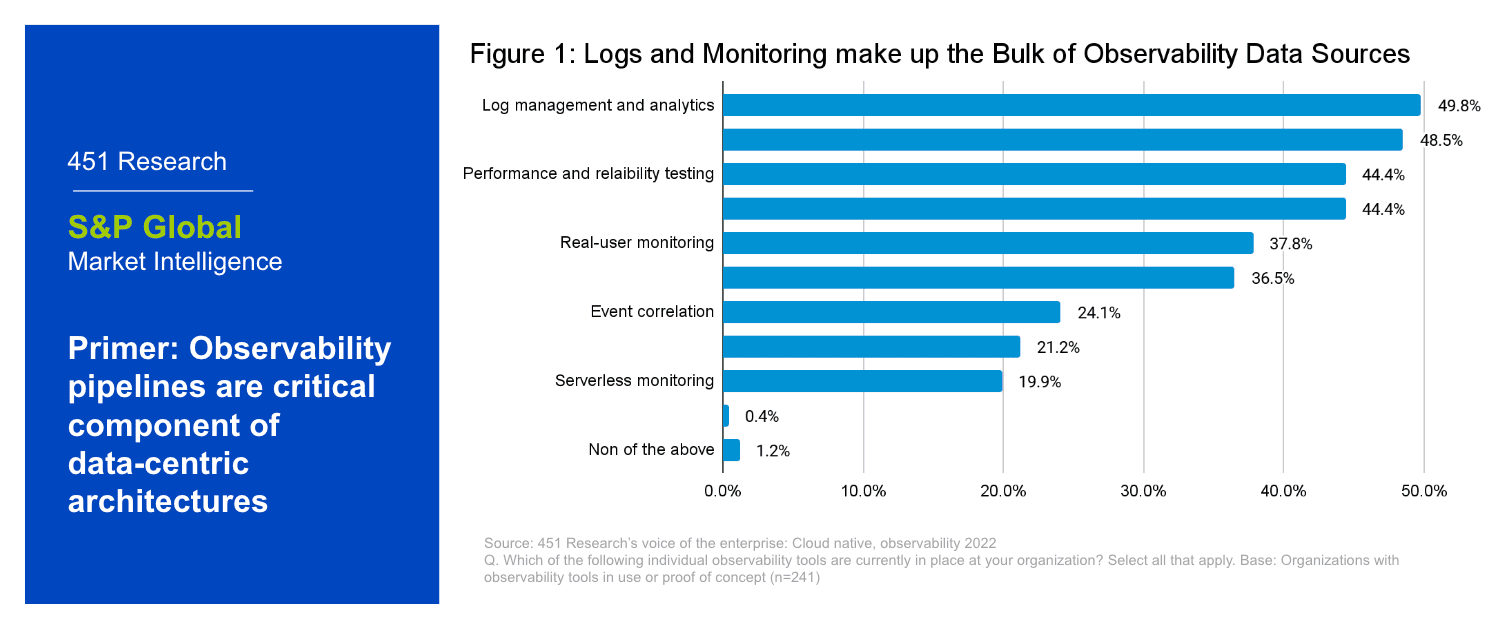

To illustrate, let’s discuss log data: the single largest data set for monitoring, planning, security and optimization. Although many organizations ignored log data’s potential as a resource in the past, it is now widely regarded as an untapped wealth of monitoring data.

But due to its immense scale and complexity, sorting through the mass of unstructured, machine-generated log data is a monumental undertaking. A robust log management process makes it possible to organize and analyze these high-volume, high-velocity data sets efficiently.

What is a log management process?

At a high level, log management handles every aspect of logs and works to achieve two goals:

- Collect, store, process, synthesize and analyze data to support digital performance and reliability, troubleshooting, forensic investigations and business insights.

- Ensure the reliability, compliance and security of applications and infrastructure across an enterprise’s complete software stack.

Three main components make up a comprehensive log management process: procedures, policies and tools. To provide greater insight into these concepts, Sumo Logic created this guide to explore these components in detail.

Why is log management important?

At its core, log management is the practice of collecting, organizing and correlating enormous quantities of log events in order to generate real-time analytics and insights. This is crucial for several reasons, including:

- Tighter security: Log management records user activity, which helps prevent data compromises and breaches, trace them to their source and minimize their impact.

- Improved system reliability: Monitoring log files keeps DevOps personnel informed about how systems are functioning. Log management also helps teams quickly identify the root causes of performance issues when they occur.

- Greater customer insights: The data insights derived from logs and log analytics extend beyond improving security and system performance. This data can also help businesses understand how customers interact with their applications. This includes determining which browsers your customers use, as well as other behavioral insights.

- Simplified data observability: Log management allows data to be gathered from disparate applications and stored in one place. This simplifies the process of obtaining application performance data, business analytics and troubleshooting insights.

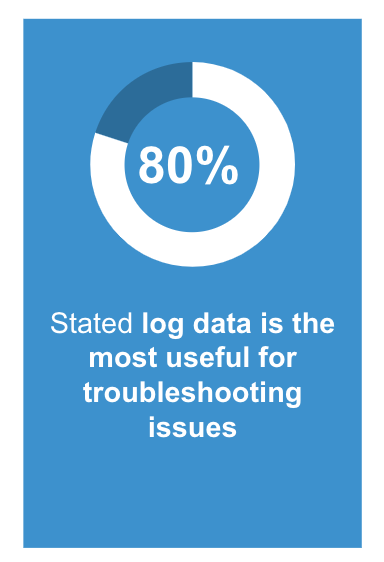

Survey of Sumo Logic customers (February 2023) on the most useful type of data for troubleshooting issues.

What are the log management process steps?

Although the specifics vary from organization to organization, five basic steps occur in every log management process.

1. Instrument and collect

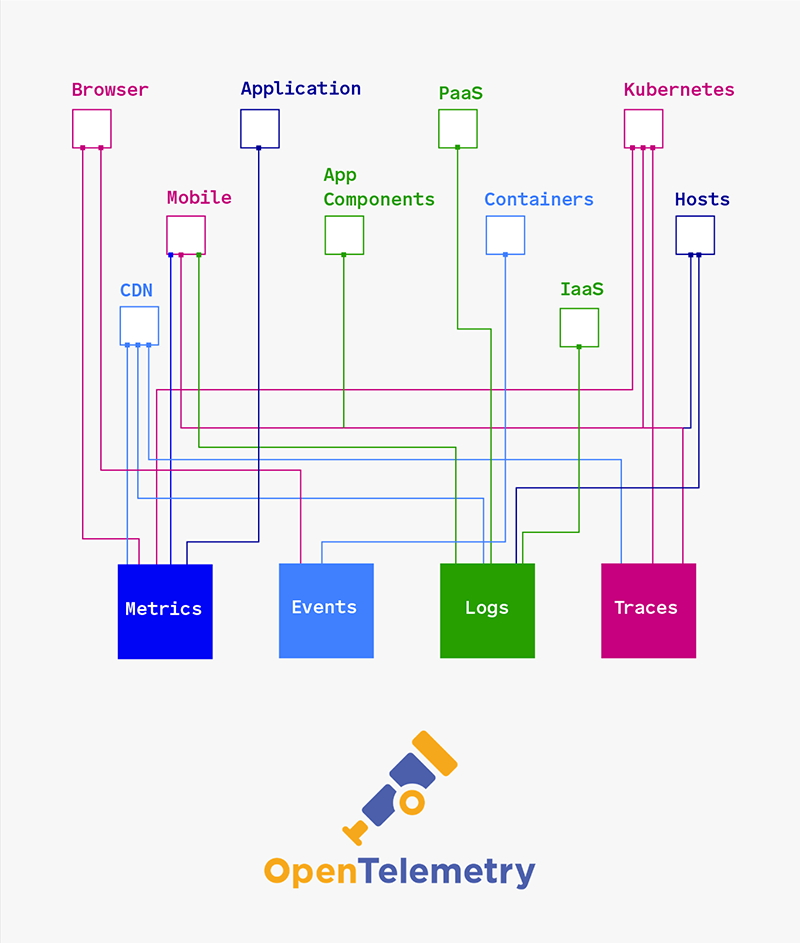

For the first step in the log management process, collect logs from separate locations throughout your tech stack so they can be aggregated in step two. This is typically done with a log collector tool. Many organizations use conventional tools like rsync or cron jobs to copy log data on a schedule, but these batch transfers don’t support real-time monitoring. Collecting all logs is critical to maintaining ongoing monitoring and review processes. Open-source OpenTelemetry tools are also worth mentioning: they can generate, gather and export machine data, which provides increased visibility into all layers of your tech stack and enables greater observability.

OpenTelemetry is a collection of tools, APIs and SDKs you can use to instrument, generate, collect and export telemetry data such as metrics, logs and traces.

It is becoming the gold standard for collecting machine data.
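Scheduled tools like rsync or cron copy log files in batches, so new events wait until the next run. A collector that supports real-time monitoring instead remembers how far it has read into each file and ships new lines as they appear. The Python sketch below illustrates that idea under simple assumptions: one local, append-only file and an in-memory offset. The names `read_new_lines` and `follow` are hypothetical, and production collectors also handle rotation across files, multiple sources and durable checkpoints.

```python
import os
import time


def read_new_lines(path: str, offset: int) -> tuple[list[str], int]:
    """Return complete lines written to `path` since byte `offset`, plus the new offset."""
    if os.path.getsize(path) < offset:
        offset = 0  # file shrank (truncated or replaced): start again from the top
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    # Consume only up to the last newline; a partially written line waits for the next poll.
    end = data.rfind(b"\n") + 1
    lines = data[:end].decode("utf-8", errors="replace").splitlines()
    return lines, offset + end


def follow(path: str, poll_seconds: float = 1.0):
    """Yield new lines as they are appended, polling like `tail -f` (runs until stopped)."""
    offset = 0  # a real collector would persist this checkpoint across restarts
    while True:
        lines, offset = read_new_lines(path, offset)
        yield from lines
        if not lines:
            time.sleep(poll_seconds)
```

For example, `for line in follow("/var/log/app.log"): ship(line)` would forward each new line, where `ship` is a hypothetical stand-in for whatever sends data to your central platform.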

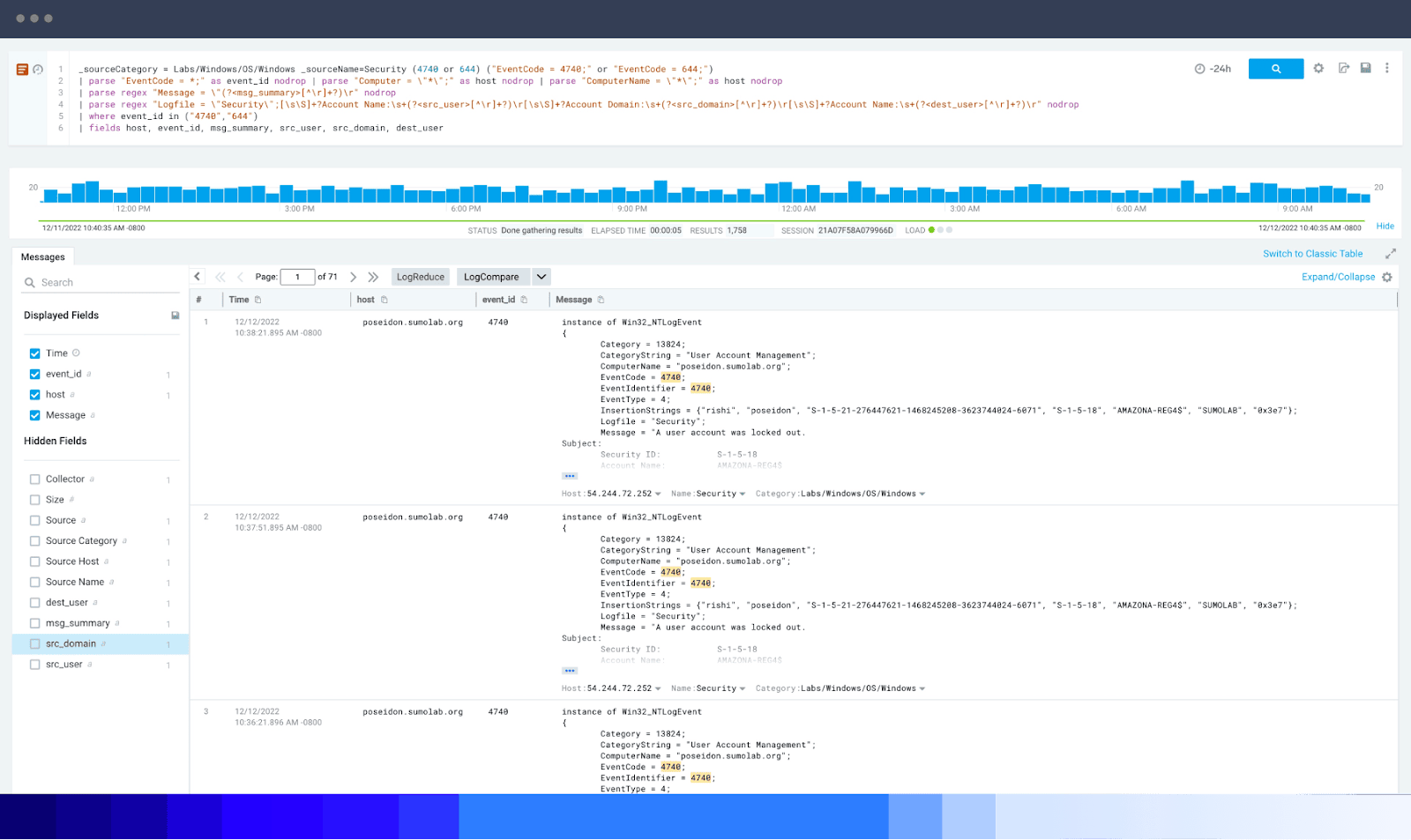

In addition to collecting logs, parsing is another important component of this initial procedure. Log parsing extracts log templates and parameters to interpret log files. This enables a log management system to read, catalog and store the data included in each file. Log parsing applies to semi-structured and unstructured use cases, but unstructured data requires additional heavy lifting. In short, the parsing process takes data that is difficult to digest and converts it into a more readable format.
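Production log parsers use more sophisticated algorithms (Drain is a well-known example), but the core idea of separating a constant template from its variable parameters can be sketched with one regular expression. The masking rules below are illustrative assumptions, not a complete set, and `extract_template` is a hypothetical helper.

```python
import re

# Illustrative masking rules for tokens that usually vary between otherwise
# identical messages. Alternatives are tried left to right at each position.
VARIABLE = re.compile(
    r"\b\d{1,3}(?:\.\d{1,3}){3}\b"  # IPv4 address
    r"|\b0x[0-9a-fA-F]+\b"          # hexadecimal value
    r"|\b\d+(?:\.\d+)?\b"           # integer or decimal number
)


def extract_template(message: str) -> tuple[str, list[str]]:
    """Split a log message into a constant template and its variable parameters."""
    params = VARIABLE.findall(message)
    template = VARIABLE.sub("<*>", message)
    return template, params
```

Messages that share a template, such as two "Connection from <*> closed after <*> ms" events with different addresses and durations, can then be grouped and counted as one event type.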

Parsing can get tricky when using conventional tools. Because log data comes in many formats, traditional tools’ parsing capabilities do not extend across all log file formats. This means users are often required to create custom parsers, which can be a huge time suck. To fix this issue, tools like Sumo Logic can extract fields from log messages, regardless of format. If you are interested in learning more, check out our parse operators resource.
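Sumo Logic’s parse operators are the product’s own feature. The general idea of format-agnostic extraction, trying several common formats before falling back to the raw text, can be sketched in plain Python. `extract_fields` is a hypothetical helper, not how any product implements this, and it recognizes only JSON objects and key=value pairs (as in logfmt-style logs).

```python
import json
import re

# key=value pairs; values are bare tokens or double-quoted strings.
KV_PAIR = re.compile(r'(\w+)=("[^"]*"|\S+)')


def extract_fields(line: str) -> dict:
    """Best-effort field extraction that tries several common formats in turn."""
    stripped = line.strip()
    # 1. JSON objects, common for structured application logs.
    if stripped.startswith("{"):
        try:
            obj = json.loads(stripped)
            if isinstance(obj, dict):
                return obj
        except json.JSONDecodeError:
            pass  # not valid JSON after all; fall through to the next format
    # 2. key=value pairs, common for proxies, firewalls and logfmt output.
    pairs = KV_PAIR.findall(stripped)
    if pairs:
        return {key: value.strip('"') for key, value in pairs}
    # 3. Unrecognized format: keep the raw message so nothing is lost.
    return {"message": stripped}
```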

2. Centralize and index

Once all log files and data have been collected and parsed, the next step is aggregation. Log aggregation involves consolidating all logs in one central place, on one platform, to create a single source of truth. Aggregation takes care of consolidation, while indexing makes the data searchable so IT and security teams can find the information they need efficiently.
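Indexing is what makes a centralized store searchable: instead of scanning every stored event for each query, the system looks up which events contain each term. A toy in-memory inverted index in Python illustrates the idea. The `LogIndex` class is purely illustrative; real platforms use far more elaborate, distributed index structures.

```python
import re
from collections import defaultdict

TOKEN = re.compile(r"\w+")


class LogIndex:
    """Toy inverted index: maps each lowercase token to the IDs of events containing it."""

    def __init__(self) -> None:
        self.events: list[str] = []
        self.postings: dict[str, set[int]] = defaultdict(set)

    def add(self, event: str) -> int:
        event_id = len(self.events)
        self.events.append(event)
        for token in TOKEN.findall(event.lower()):
            self.postings[token].add(event_id)
        return event_id

    def search(self, *terms: str) -> list[str]:
        """Return events containing every term (AND semantics), in ingest order."""
        if not terms:
            return []
        # Intersect posting sets instead of scanning every stored event.
        ids = set.intersection(*(self.postings.get(t.lower(), set()) for t in terms))
        return [self.events[i] for i in sorted(ids)]
```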

Having precious data strewn about your IT infrastructure creates enormous inefficiencies and heightens the risk of a security breach. Collecting the same log data multiple times for different use cases increases the cost to acquire that data.

Let’s look at log management in cyber security to further illustrate why aggregation is so important. Security analysts often rely on log event data to determine a breach’s root cause and severity. Having log data spread across various locations makes it much more difficult for analysts to find the information they need to eradicate the cyber threat. Centralizing and indexing this information offers a consolidated view of all activity across the network, making it easier to identify threats and troubleshoot other issues quickly. A consolidated view of log data should be top of mind for most organizations, as the sudden increase in remote work has created new challenges for cyber and data security.

Store all structured and unstructured logs and security events in a single security data lake for maximum efficiency.

In the past, many organizations have relied solely on Syslog shippers to transfer log data to a central location. Although this simple method works, Syslog is often difficult to scale. Sumo Logic’s cloud-native platform and 300+ applications and integrations address the inefficiencies of legacy products, such as Syslog shippers, and simplify the aggregation process across your tech stack.

3. Search/query and analyze

After aggregation is complete, it is time to analyze log data. In simple terms, log analysis is the practice of reviewing and understanding log data. The ultimate goal of log analysis is to help businesses organize and extract useful analytics and insights from their centralized logs. Like all procedures in log management, log analysis is complex and consists of many layers.

In the following sections, we’ll explore some of the most common questions surrounding log analysis.

How does log analysis work?

Several methods and functions enable you to pull valuable analytics from log files in the analysis process:

- Normalization is a data management methodology designed to improve data integrity by converting data into a standard format.

- Pattern recognition relies on machine learning and log analysis software to determine which log messages are relevant and which are irrelevant. Without a method for determining the value of that data, logs are less like an information gold mine and more like a black hole.

- Classification and tagging help you visualize data. This function groups log entries into different classes so all similar entries stick together. Classification and tagging make it much easier for you to search through log data efficiently.

- Correlation analysis comes into play when reviewing a specific event, good or bad. This process involves gathering log information from all systems to evaluate and identify correlations between the log data and the event you are investigating.
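The rule-based parts of this list, normalization and classification/tagging, can be sketched in a few lines of Python; machine-learning pattern recognition is out of scope here. The severity alias table, tag rules and field names (`timestamp`, `level`, `message`) are hypothetical, and the sketch assumes Python 3.9+ ISO 8601 strings that carry an explicit offset or are already in UTC.

```python
import re
from datetime import datetime, timezone

# Hypothetical mapping of source-specific severity labels onto one standard scale.
SEVERITY_ALIASES = {
    "crit": "CRITICAL", "fatal": "CRITICAL",
    "err": "ERROR", "error": "ERROR",
    "warn": "WARNING", "warning": "WARNING",
    "info": "INFO", "notice": "INFO",
}

# Hypothetical tagging rules: a tag applies when any keyword is a word in the message.
TAG_RULES = {
    "auth": {"login", "password", "token"},
    "database": {"sql", "db", "deadlock"},
}


def normalize(raw: dict) -> dict:
    """Convert one parsed event into a standard shape: UTC timestamp, severity and tags."""
    ts = raw["timestamp"]
    if isinstance(ts, (int, float)):  # Unix epoch seconds
        when = datetime.fromtimestamp(ts, tz=timezone.utc)
    else:  # ISO 8601 string; values without an offset are assumed to be UTC
        when = datetime.fromisoformat(ts)
        if when.tzinfo is None:
            when = when.replace(tzinfo=timezone.utc)
        when = when.astimezone(timezone.utc)
    message = raw.get("message", "")
    words = set(re.findall(r"\w+", message.lower()))
    return {
        "timestamp": when.isoformat(),
        "severity": SEVERITY_ALIASES.get(str(raw.get("level", "")).lower(), "UNKNOWN"),
        "message": message,
        # Classification: every tag whose keyword set overlaps the message's words.
        "tags": sorted(tag for tag, keywords in TAG_RULES.items() if words & keywords),
    }
```

Two events from different sources, one stamped in epoch seconds and one in local time with a `+02:00` offset, end up with identical UTC timestamps, which is what makes later correlation across systems possible.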

Deep interrogation of all datasets through robust log queries accelerates threat detection and the troubleshooting of performance issues.
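At its simplest, correlating logs around an event means filtering events from every system by time window and by shared fields. A minimal Python sketch follows, assuming events have already been normalized into dictionaries with a `datetime` field named `time` and a shared identifier such as `request_id`; both field names and the `correlate` helper are hypothetical.

```python
from datetime import datetime, timedelta


def correlate(events, incident_time: datetime,
              window: timedelta = timedelta(minutes=5), **filters):
    """Return events from any system within +/- `window` of `incident_time`
    that match every field=value filter, sorted by time."""
    start, end = incident_time - window, incident_time + window
    hits = [
        e for e in events
        if start <= e["time"] <= end
        and all(e.get(field) == value for field, value in filters.items())
    ]
    return sorted(hits, key=lambda e: e["time"])
```

For example, `correlate(events, incident_time, request_id="r1")` returns the database, application and load balancer events tied to one failing request in the order they happened.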

What are the challenges of log analysis?

Logs are the most comprehensive of all types of big data. Their extensive detail makes them ideal sources of information for analyzing the state of any business system. However, the large scale and complex nature of log data make it challenging to analyze for several reasons:

- Log data comes in many varieties, is mostly unstructured and is generated in mass quantities, making it difficult for relational databases to analyze.

- Inconsistent log formatting is a pervasive issue. Because every application and device approaches logging differently, standardizing logs takes considerable work.

- Many teams struggle to right-size their log storage. Log files can quickly become cluttered, which leads to surging cloud storage costs.
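Right-sizing starts with simple arithmetic: steady-state storage is roughly daily ingest × retention period ÷ compression ratio, multiplied by the number of stored copies. The Python helper below is a hypothetical sketch of that estimate; it ignores index overhead and ingest growth, and the example figures are illustrative, not benchmarks.

```python
def estimate_storage_gb(daily_ingest_gb: float, retention_days: int,
                        compression_ratio: float = 1.0, replicas: int = 1) -> float:
    """Approximate steady-state storage needed to keep `retention_days` of logs.

    compression_ratio is raw size / stored size (5.0 means 5:1).
    Index overhead and growth in daily volume are deliberately ignored.
    """
    if compression_ratio <= 0:
        raise ValueError("compression_ratio must be positive")
    return daily_ingest_gb * retention_days / compression_ratio * replicas
```

With a hypothetical 50 GB/day of logs compressed 5:1, 90 days of retention needs about 900 GB, while keeping verbose logs for only 30 days brings that down to about 300 GB.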

Tools for large datasets meet these challenges. Take Sumo Logic, for example. Sumo Logic’s user-friendly automated platform provides real-time analytics to help you rapidly review log data and much more. No more time-consuming and complex installations or upgrades, and no more managing and scaling hardware or storage.

How can a log analysis practice be improved?

The benefits of log analysis are impressive. But if you don’t mitigate the above challenges, all you have is a pile of unactionable data. Optimize your log data to obtain true value from your log management processes and log file analysis.

Log analyzers and other log management tools can optimize the processes and provide meaningful insights from log data. Adopting these tools enables developers, security and operations teams to seamlessly monitor all business applications and visualize log data to obtain greater context.

4. Monitor and alert

After analysis, you can move on to the monitoring and alerting phase. But what is the purpose of log monitoring? Monitoring log data maintains situational awareness. The versatile information collected from log files can inform many processes, including monitoring system performance. Once analysis shows IT teams where inefficiencies and errors commonly occur, they can create alerts that notify the right people through the proper channels. Log monitoring procedures evaluate the health of your systems, identify potential risks and help prevent security breaches.
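A common alert type is count-based: fire when more than N matching events (for example, ERROR logs) arrive within a sliding time window. A minimal Python sketch follows; `ErrorRateAlert` is a hypothetical class, it assumes timestamps arrive in non-decreasing order, and it leaves out notification routing and suppression of repeated alerts.

```python
from collections import deque


class ErrorRateAlert:
    """Fires when more than `threshold` matching events arrive within `window_seconds`."""

    def __init__(self, threshold: int, window_seconds: float) -> None:
        self.threshold = threshold
        self.window = window_seconds
        self.times: deque = deque()

    def observe(self, timestamp: float) -> bool:
        """Record one matching event; return True if the alert condition is met."""
        self.times.append(timestamp)
        # Drop events that have slid out of the window.
        while self.times and self.times[0] <= timestamp - self.window:
            self.times.popleft()
        return len(self.times) > self.threshold
```

With `ErrorRateAlert(threshold=3, window_seconds=60)`, errors at 0, 10 and 20 seconds stay quiet, a fourth at 30 seconds fires, and an isolated error at 200 seconds does not.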

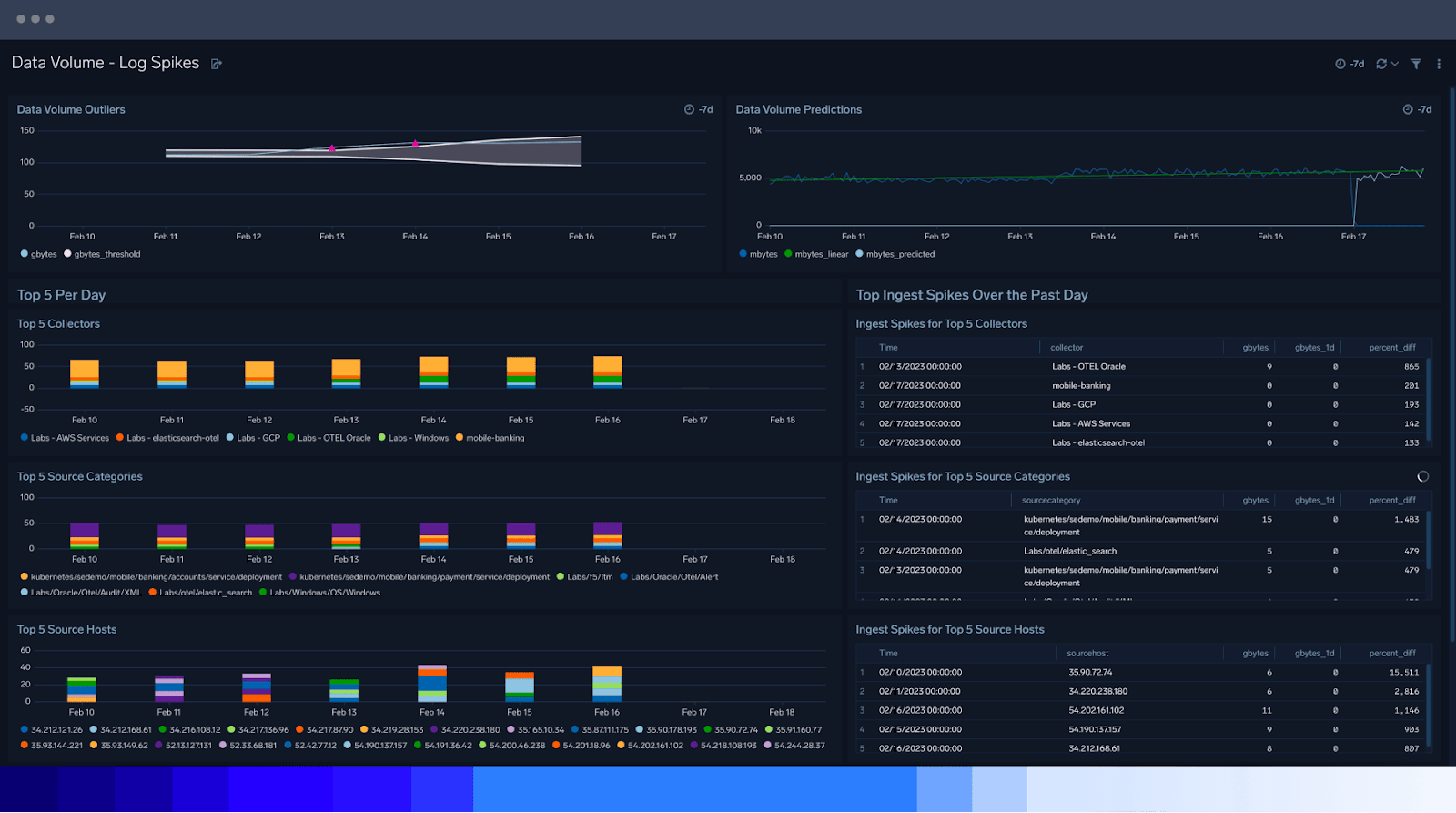

5. Reporting and dashboarding

After collecting, analyzing and monitoring, it is time to report your progress and findings. This involves visualizing important data and metrics unearthed throughout the log management process. The goals of reporting and dashboarding are threefold:

- Surface the right analytics for each use case. Standard dashboards can get you started quickly, while custom dashboards deliver analytics specific to your use cases.

- Provide secure access to confidential security logs and other sensitive information.

- Ensure all decision-makers and stakeholders understand the discoveries made during the log management process.
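Most dashboard panels are backed by an aggregation over log events. A minimal Python sketch of one such aggregation counts events of a given severity per hour and per service; the `time` (a `datetime`), `service` and `severity` fields and the `hourly_counts` helper are hypothetical.

```python
from collections import Counter


def hourly_counts(events, severity: str = "ERROR") -> dict:
    """Count events of one severity per (hour, service) bucket for a dashboard panel."""
    counts: Counter = Counter()
    for e in events:
        if e["severity"] == severity:
            # Truncate the timestamp to the start of its hour to form the bucket.
            hour = e["time"].replace(minute=0, second=0, microsecond=0)
            counts[(hour.isoformat(), e["service"])] += 1
    return dict(sorted(counts.items()))
```

A dashboard would chart these buckets over time, one series per service.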

What is a log management policy?

A log management policy defines guidelines and processes for generating, collecting, storing, analyzing and reporting log data. This policy should be comprehensive, encompassing an organization’s entire IT environment and instructing all individuals who interact with log data.

To create a successful log management policy, businesses should:

- Keep things relatively simple and scalable. Requirements that are too unwieldy or rigid are easy to overlook.

- Understand how each log level is used and what your storage constraints are so you can plan accordingly.

- Review all applicable regulations and develop guidelines that promote compliance. This review also helps you decide who can access which data so you can set appropriate role-based access control (RBAC) parameters.
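Capturing a policy as data makes its retention and access rules reviewable and enforceable by tooling. The Python sketch below is a hypothetical example: the categories, retention periods and roles are illustrative placeholders, not recommendations, and real values should come from your regulatory review.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogPolicy:
    """One hypothetical policy entry: how long a log category is kept and who may read it."""
    category: str
    retention_days: int
    allowed_roles: frozenset[str]


# Illustrative values only.
POLICIES = {
    p.category: p for p in (
        LogPolicy("application", 30, frozenset({"developer", "sre", "security"})),
        LogPolicy("security", 365, frozenset({"security", "auditor"})),
        LogPolicy("payment", 365, frozenset({"auditor"})),
    )
}


def can_read(role: str, category: str) -> bool:
    """RBAC check; deny by default, so unknown categories are readable by no one."""
    policy = POLICIES.get(category)
    return policy is not None and role in policy.allowed_roles


def is_expired(category: str, age_days: int) -> bool:
    """True if logs of this category and age are past their retention period."""
    return age_days > POLICIES[category].retention_days
```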

Logging policy example: NIST 800-53 logging and monitoring policy

NIST 800-53 is a U.S. cybersecurity standard and compliance framework created by the National Institute of Standards and Technology (NIST). Although NIST 800-53 is mandatory mainly for U.S. federal agencies and their information systems, any business can adopt a similar set of policies.

NIST 800-53 addresses logging in its Audit and Accountability (AU) control family, and NIST provides detailed log management guidance in Special Publication (SP) 800-92, the Guide to Computer Security Log Management. SP 800-92 “provides practical, real-world guidance on developing, implementing and maintaining effective log management practices throughout an enterprise.” Guidelines for the following concepts appear throughout the publication:

- Log management infrastructure

- Log management planning

- Log management operational processes

Of course, SP 800-92 is not the only log management guidance out there. Many countries have developed their own methods for regulating log management processes. For example, the Ministry of Justice and the National Cyber Security Centre both have a hand in crafting log management policy for the United Kingdom.

What are log management tools?

As companies navigate expansive tech stacks and a sizable increase in logging data, they must have a system to process and manage all this information. At a high level, log management tools provide comprehensive and actionable insights into systems’ overall health and operational performance. They automate processes involved with log management to maintain compliance while generating operational, security and business insights. Recently, there has been a heavy push to make log management systems more user-friendly and easier for non-IT teams to navigate. This benefits collaboration and communication throughout any organization.

On a micro level, key features of log management tools include:

- Data cleansing capabilities

- Aggregate logging

- Automated log discovery and monitoring

- Query language-based search

- Log archiving and indexing

- Automated parsing

- Trend- and count-based alerting
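Data cleansing can mean several things; two common tasks are masking sensitive values and dropping duplicate records. A minimal Python sketch of both follows. The redaction patterns are hypothetical and deliberately simple: the card pattern also masks other 13- to 16-digit numbers (a Luhn checksum test would cut those false positives), and duplicates are detected on the raw line so distinct events are not collapsed after masking.

```python
import re

# Hypothetical redaction rules; real rule sets depend on your data and regulations.
REDACTIONS = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "<email>"),    # email addresses
    (re.compile(r"\b\d(?:[ -]?\d){12,15}\b"), "<card>"),     # 13-16 digit card-like numbers
]


def cleanse(lines):
    """Drop exact duplicate raw lines and mask sensitive values, preserving order."""
    seen = set()
    for line in lines:
        if line in seen:
            continue  # exact duplicate of an earlier raw line
        seen.add(line)
        for pattern, replacement in REDACTIONS:
            line = pattern.sub(replacement, line)
        yield line
```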

Many companies need log management and analytics systems that go beyond the basics. Sumo Logic goes the extra mile with these and other features:

- Real-time analytics

- Single source solution made to eliminate data silos

- Integrated observability and security

- Highly scalable log analytics

- Cloud-native distributed architecture

- Flexible, cost-effective licensing

- Enterprise-grade and secure multi-tenant architecture

- Instantly scalable analytics

- Standard out-of-the-box (OOTB) and customizable dashboards

- Integrated full-stack capabilities

Discover more log management best practices

In the end, a comprehensive and effective log management process leads to greater security, better insights into all business processes and more satisfied customers. If you are interested in learning more about log management and analytics, explore our resource repository, read our case studies or start a free trial to see if Sumo Logic is the right fit for your business.