Kubernetes is a best-in-class container orchestration solution, while Docker is the most widely used containerization platform. Though often compared as alternative solutions, they aren’t direct competitors. Docker is a platform for building and running containers, and Kubernetes is an orchestrator that runs and manages those containers across a cluster. In fact, Kubernetes needs a container runtime to function and routinely runs images built with Docker, making the two complementary technologies.

So, what’s the difference between Docker and Kubernetes? We’ll break down the relationship between Kubernetes and Docker, clear up common misconceptions, and explain what people really mean when they compare Docker and Kubernetes.

Kubernetes vs Docker: Understanding containerization

You can’t talk about Docker without first exploring containers.

Containers solve a critical issue in application development. When developers write code, they’re working on their own local development environment. But when they move that code to production, problems arise. The code that worked perfectly on their machine can fail in production because of differences in operating systems, dependencies, and libraries.

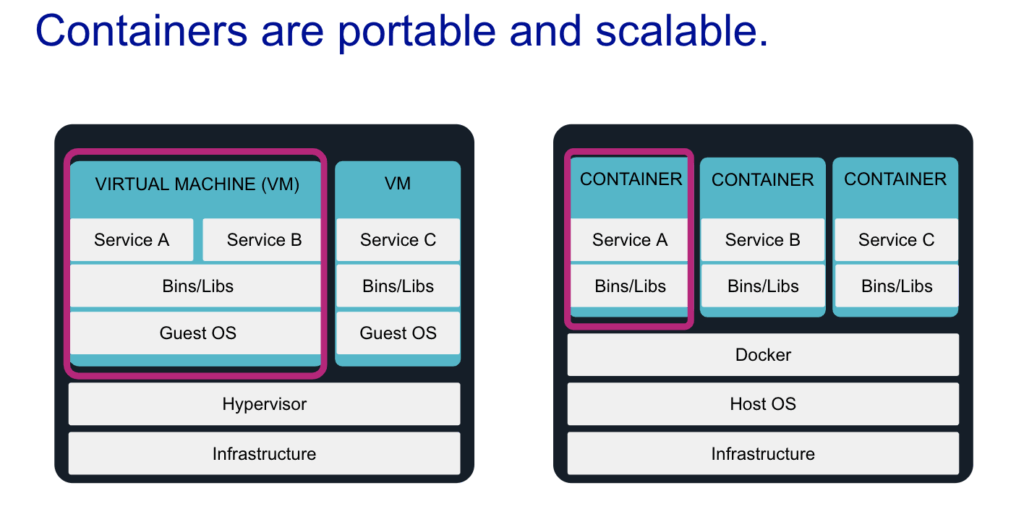

Containers solved this critical issue of portability by separating code from the underlying infrastructure on which it runs. Developers could package up their application (including all of the binaries and libraries it needs to run correctly) into a small container image. In production, that container can run on any machine that has a compatible container runtime.

Why use containers instead of virtual machines?

Containers and container platforms offer many advantages over traditional virtualization.

Containers have an extremely small footprint. They include only the application and the binaries and libraries it requires to run. Unlike virtual machines (VMs), which each have a complete copy of a guest operating system, containers share the host’s kernel and are isolated using kernel features such as namespaces and cgroups, with no guest operating system required.

Because container image layers are cached and reused, images built on the same layers don’t duplicate those dependencies on disk, which reduces image bloat and saves further space. For example, if you have three applications that all run on Node and Express and are built from the same base image containing those frameworks, you don’t need three separate copies of them. The images share that base layer, and so do the containers created from them.
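To make the space savings concrete, here is a toy Python model of content-addressed layer storage. The layer names, contents, and sizes are invented for illustration. The one idea borrowed from Docker is that a layer is identified by the SHA-256 digest of its content, so identical layers are stored only once.

```python
import hashlib

# Hypothetical layer contents; a real layer is a tarball of filesystem changes.
LAYERS = {
    "node-express-base": b"node runtime + express framework",
    "app-a": b"application A code",
    "app-b": b"application B code",
    "app-c": b"application C code",
}

# Hypothetical layer sizes in MB, for illustration only.
SIZES_MB = {"node-express-base": 180, "app-a": 5, "app-b": 8, "app-c": 3}

# Each image is an ordered list of layer names, base layer first.
IMAGES = {
    "image-a": ["node-express-base", "app-a"],
    "image-b": ["node-express-base", "app-b"],
    "image-c": ["node-express-base", "app-c"],
}


def digest(name: str) -> str:
    """Content address of a layer: identical content yields an identical digest."""
    return hashlib.sha256(LAYERS[name]).hexdigest()


def disk_usage(images: dict[str, list[str]], shared: bool) -> int:
    """Total MB on disk, either copying every layer or storing each unique layer once."""
    if not shared:
        return sum(SIZES_MB[layer] for layers in images.values() for layer in layers)
    unique: dict[str, str] = {}  # digest -> layer name
    for layers in images.values():
        for layer in layers:
            unique[digest(layer)] = layer
    return sum(SIZES_MB[layer] for layer in unique.values())


if __name__ == "__main__":
    print("without sharing:", disk_usage(IMAGES, shared=False), "MB")
    print("with shared layers:", disk_usage(IMAGES, shared=True), "MB")
```

With these made-up numbers, the shared 180 MB base layer is counted once instead of three times, so the total drops from 556 MB to 196 MB.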

Encapsulating applications in self-contained environments allows for quicker deployments, closer parity between development and production environments, and easier scaling.

What is Docker?

Docker is currently the most popular container technology and is widely used by site reliability engineers (SREs), DevOps and DevSecOps teams, developers, testers, and system admins.

Docker appeared on the market at the right time and was open source from the beginning, which likely led to its current market domination. According to Flexera’s 2023 State of the Cloud report, 39% of enterprises currently use Docker in their AWS environment, and that number continues to grow.

Key Docker features

When most people talk about Docker, they’re talking about the Docker Engine, the runtime that allows you to build and run containers. But before you can run a Docker container, you need an image, which is typically built from a Dockerfile.

- Dockerfile: Defines everything needed to build a container image, including the base image (often a minimal OS), installed packages, file paths, and exposed ports.

- Docker Image: A portable, static blueprint used to create running containers. Once you have a Dockerfile, you can build a Docker image from it, and running that image on the Docker Engine creates a container.

- Docker Hub: A public registry to share and reuse images.
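The Dockerfile, image, and registry workflow can be sketched in a few lines of Python. The script writes a minimal, hypothetical Dockerfile and a tiny app into a build context, then prints the standard `docker build`, `docker run`, and `docker push` commands instead of executing them, so it runs without Docker installed. The base image `python:3.12-slim`, port 8000, and the repository name `example-user/myapp:1.0` are illustrative assumptions.

```python
import tempfile
from pathlib import Path

# Minimal hypothetical Dockerfile: base image, working directory,
# copied file, exposed port, and the command the container runs.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY app.py .
EXPOSE 8000
CMD ["python", "app.py"]
"""

# Tiny placeholder app that serves files from /app on port 8000.
APP_PY = """\
from http.server import HTTPServer, SimpleHTTPRequestHandler

HTTPServer(("0.0.0.0", 8000), SimpleHTTPRequestHandler).serve_forever()
"""


def write_build_context(directory: Path) -> Path:
    """Write the Dockerfile and app.py into a build-context directory."""
    (directory / "Dockerfile").write_text(DOCKERFILE)
    (directory / "app.py").write_text(APP_PY)
    return directory


def docker_commands(tag: str, context: Path) -> list[list[str]]:
    """Return docker CLI invocations for build, run, and push (not executed)."""
    return [
        ["docker", "build", "-t", tag, str(context)],        # Dockerfile -> image
        ["docker", "run", "--rm", "-p", "8000:8000", tag],   # image -> container
        ["docker", "push", tag],                             # image -> registry
    ]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        ctx = write_build_context(Path(tmp))
        for cmd in docker_commands("example-user/myapp:1.0", ctx):
            print(" ".join(cmd))
```

Running the printed commands for real requires Docker, and pushing to Docker Hub also requires logging in first with `docker login`.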

What is Kubernetes?

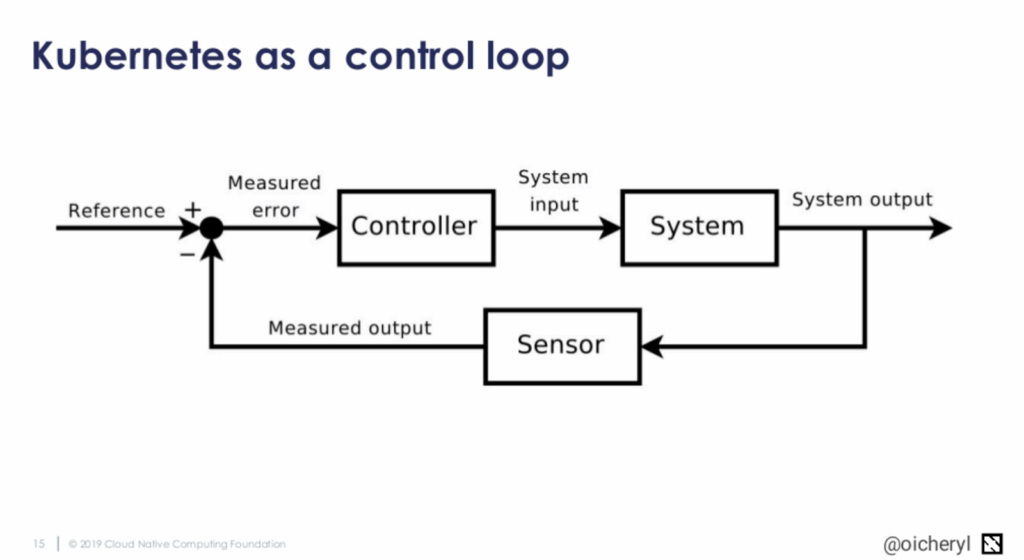

It’s easy to get lost in the details of Kubernetes, but at the end of the day, what Kubernetes is doing is pretty simple. Kubernetes is a powerful container orchestration platform developed at Google and now maintained by the Cloud Native Computing Foundation (CNCF). It automates deployment, scaling, and management of containerized applications across clusters of machines.

For example, you can decide how you want your system to look (three copies of container image A and two copies of container image B), and Kubernetes will make that happen. Kubernetes compares the desired state to the actual state, and if they differ, it takes steps to bring the actual state in line with the desired state.
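The desired-state idea can be illustrated with a toy control loop in Python. This is a sketch of the concept only. Real Kubernetes controllers watch objects through the API server and act on Pods, not on a `Counter` of image names, and the `reconcile` and `apply` helpers here are hypothetical.

```python
from collections import Counter


def reconcile(desired: dict[str, int], actual: Counter) -> list[tuple[str, str]]:
    """Compute the actions that move the actual state toward the desired state.

    Each action is ("start", image) or ("stop", image).
    """
    actions: list[tuple[str, str]] = []
    for image in sorted(set(desired) | set(actual)):
        diff = desired.get(image, 0) - actual[image]
        if diff > 0:
            actions.extend(("start", image) for _ in range(diff))
        elif diff < 0:
            actions.extend(("stop", image) for _ in range(-diff))
    return actions


def apply(actions: list[tuple[str, str]], actual: Counter) -> Counter:
    """Simulate executing the actions against the running containers."""
    result = Counter(actual)
    for verb, image in actions:
        result[image] += 1 if verb == "start" else -1
    return +result  # unary plus drops images whose count fell to zero


if __name__ == "__main__":
    desired = {"A": 3, "B": 2}
    actual = Counter({"A": 1, "B": 3, "C": 1})  # C is not wanted at all
    plan = reconcile(desired, actual)
    print(plan)
    actual = apply(plan, actual)
    print(dict(actual))
    print(reconcile(desired, actual))  # empty: nothing left to correct
```

The plan starts two more A containers, stops one B, and stops the unwanted C. Running `reconcile` again afterwards returns an empty list, which is the steady state a controller loops back to.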

Kubernetes is the market leader and the standardized means of orchestrating containers and deploying distributed applications. It can run on a public cloud service or on-premises, is highly modular and open source, and has a vibrant community. Companies of all sizes invest in it, and many cloud computing providers offer Kubernetes as a service. Sumo Logic provides support for all orchestration technologies, including Kubernetes-powered applications.

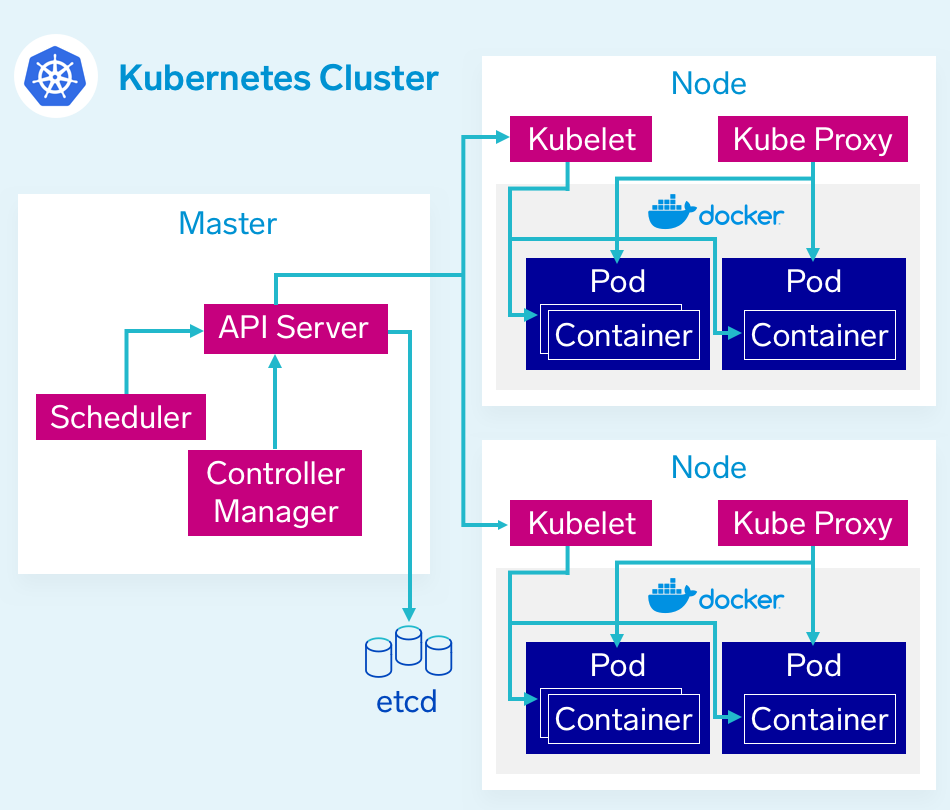

Key components of the Kubernetes architecture

Kubernetes is made up of many components that don’t know or care about each other. The components talk to each other through the API server. Each component performs its own function and exposes metrics that we can collect for monitoring later. We can break down the components into three main parts.

- Control plane: The control plane (historically called the master node) orchestrates cluster activities through components like the API server, etcd, the scheduler, and the controller manager.

- Nodes: Machines (physical or virtual) that run workloads and make up the collective compute power of the Kubernetes cluster. Nodes are where containers are deployed and run, and they are the infrastructure that your application runs on.

- Pods: The smallest deployable unit in the Kubernetes cluster, often containing a single container. When you define a pod, you can set CPU and memory requests and limits for each of its containers. The scheduler uses the requests to decide which node to place the pod on. If a pod has more than one container, the scheduler adds up their requests, so every container needs accurate requests for the pod to be placed appropriately.
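Here is a simplified Python model of how requests drive placement. It loosely mirrors the scheduler’s filter-then-score approach: it sums container requests into a pod request, filters out nodes without enough unrequested capacity, and scores the rest. The scoring rule (most free CPU wins) and all node and container names are assumptions chosen for brevity. This is not the default kube-scheduler scoring, and init containers are ignored.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Container:
    name: str
    cpu_millicores: int  # CPU request, e.g. 250 = 0.25 CPU
    memory_mib: int      # memory request in MiB


@dataclass
class Node:
    name: str
    free_cpu_millicores: int  # allocatable CPU minus requests already placed here
    free_memory_mib: int      # allocatable memory minus requests already placed here


def pod_requests(containers: list[Container]) -> tuple[int, int]:
    """The pod's request is the sum of its containers' requests."""
    return (
        sum(c.cpu_millicores for c in containers),
        sum(c.memory_mib for c in containers),
    )


def fits(node: Node, cpu: int, mem: int) -> bool:
    """Filter step: does the node have enough unrequested CPU and memory?"""
    return node.free_cpu_millicores >= cpu and node.free_memory_mib >= mem


def feasible_nodes(containers: list[Container], nodes: list[Node]) -> list[str]:
    cpu, mem = pod_requests(containers)
    return [n.name for n in nodes if fits(n, cpu, mem)]


def pick_node(containers: list[Container], nodes: list[Node]) -> Optional[str]:
    """Score step (simplified): choose the feasible node with the most free CPU."""
    cpu, mem = pod_requests(containers)
    candidates = [n for n in nodes if fits(n, cpu, mem)]
    if not candidates:
        return None  # no node fits; the pod would stay Pending
    return max(candidates, key=lambda n: n.free_cpu_millicores).name


if __name__ == "__main__":
    pod = [Container("web", 500, 256), Container("log-sidecar", 100, 64)]
    nodes = [
        Node("node-1", 400, 2048),  # too little CPU for the whole pod
        Node("node-2", 1000, 512),
        Node("node-3", 2000, 256),  # too little memory for the whole pod
    ]
    print(pod_requests(pod), feasible_nodes(pod, nodes), pick_node(pod, nodes))
```

The example shows why per-container requests matter. The sidecar’s extra 100m CPU and 64 MiB push the pod past the capacity of both node-1 and node-3, leaving node-2 as the only valid placement.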

Docker vs. Kubernetes: Do you need both?

While you can use Docker without Kubernetes, Kubernetes relies on a container runtime to function. Current Kubernetes releases talk to runtimes through the Container Runtime Interface (CRI), typically using containerd or CRI-O, while Docker remains a common choice for building the images Kubernetes runs.

Docker without Kubernetes is common in development, but Kubernetes without Docker (using a different container runtime) is increasingly popular in production.

Docker Compose vs Kubernetes

Docker Compose and Kubernetes can both run multi-container applications, but they operate at different scales. The key difference is that Kubernetes can run containers across many physical or virtual machines, while Docker Compose runs containers on a single host machine.

Kubernetes vs Docker Swarm

When most people talk about Kubernetes vs. Docker, they really mean Kubernetes vs. Docker Swarm. Docker Swarm is Docker’s native container orchestration tool, which has the advantage of being tightly integrated into the Docker ecosystem and using the same Docker CLI and API.

Like most schedulers, Docker Swarm can administer a large number of containers spread across clusters of servers. Its filtering and scheduling system enables the selection of optimal nodes in a cluster to deploy containers.

Why orchestration systems matter

As Docker containers proliferated, new problems arose:

- How do you coordinate and schedule multiple containers?

- How do you seamlessly upgrade an application without any downtime?

- How do you monitor the health of an application, detect when something goes wrong, and restart it automatically?

To solve these challenges, container orchestration platforms like Kubernetes, Mesos, and Docker Swarm emerged. These tools make a cluster of machines behave like one big machine, which is vital in a large-scale environment.
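Orchestrators commonly answer the zero-downtime upgrade question with a rolling update. The Python sketch below is a simplified model, not Kubernetes code. It assumes each new replica is healthy the moment it starts and that each old replica is stopped before its replacement starts. In Kubernetes terms, it loosely resembles a Deployment’s RollingUpdate strategy with `maxUnavailable` set and `maxSurge` set to 0.

```python
DOWN = "-"  # marker for a replica that is stopped mid-update


def rolling_update(replicas: list[str], new_version: str,
                   max_unavailable: int = 1) -> list[list[str]]:
    """Replace replicas in batches of at most `max_unavailable`.

    Returns a snapshot of the replica versions after every step.
    """
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")
    current = list(replicas)
    history = [list(current)]
    for start in range(0, len(current), max_unavailable):
        batch = range(start, min(start + max_unavailable, len(current)))
        for i in batch:
            current[i] = DOWN  # stop an old replica
        history.append(list(current))
        for i in batch:
            current[i] = new_version  # start its replacement (assumed healthy)
        history.append(list(current))
    return history


def min_available(history: list[list[str]]) -> int:
    """Fewest replicas serving traffic at any point during the update."""
    return min(sum(1 for r in snapshot if r != DOWN) for snapshot in history)


if __name__ == "__main__":
    steps = rolling_update(["v1", "v1", "v1"], "v2")
    for snapshot in steps:
        print(snapshot)
    print("minimum replicas serving:", min_available(steps))
```

With three replicas and `max_unavailable=1`, at least two replicas serve traffic at every step. That guarantee is what lets the application stay up while every replica moves to the new version.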

The truth is that containers are not easy to manage at volume in a real-world production environment. Containers at volume need an orchestration system so they can:

- Simultaneously handle a large volume of containers and users. An application may have thousands of containers and users interacting with each other at the same time, so managing these interactions requires a comprehensive overall system designed specifically for that purpose.

- Manage service discovery and communication between containers and users. How does a user find a container and stay in contact with it? Providing each microservice with its own built-in service discovery would be repetitive and inefficient at best, and at scale it would likely lead to intolerable slowdowns (or gridlock).

- Balance loads efficiently. In an ad-hoc, unorchestrated environment, the load on each container tends to follow whatever users happen to demand at the moment, which produces highly imbalanced loads at the server level. Logjams result from the inefficient allocation and limited availability of containers and system resources. Load balancing replaces this semi-chaos with order and efficient resource allocation.

- Handle authentication and security. An orchestration system such as Kubernetes makes it easier to handle authentication and security at the infrastructure level rather than the application level, and to apply consistent policies across all platforms.

- Deploy across multiple platforms. Orchestration manages the otherwise very complex task of coordinating container operation, microservice availability, and synchronization in a multi-platform, multi-cloud environment.
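Service discovery and load balancing can be pictured as a registry that maps a stable service name to a changing set of healthy endpoints and spreads requests across them. The Python class below is a toy stand-in for that idea. The service name, addresses, and the `ServiceRegistry` class itself are hypothetical. Round-robin is an assumption made for simplicity. In Kubernetes, a Service provides the stable name, and how kube-proxy picks a backend depends on its mode.

```python
class ServiceRegistry:
    """Map service names to healthy endpoints and hand them out round-robin."""

    def __init__(self) -> None:
        self._endpoints: dict[str, list[str]] = {}
        self._next: dict[str, int] = {}  # per-service round-robin position

    def register(self, service: str, endpoint: str) -> None:
        """Add an endpoint, e.g. when a new container passes its health check."""
        self._endpoints.setdefault(service, []).append(endpoint)
        self._next.setdefault(service, 0)

    def deregister(self, service: str, endpoint: str) -> None:
        """Remove an endpoint, e.g. when its container fails a health check."""
        self._endpoints[service].remove(endpoint)

    def resolve(self, service: str) -> str:
        """Return the next endpoint for a service, cycling through all of them."""
        endpoints = self._endpoints.get(service)
        if not endpoints:
            raise LookupError(f"no healthy endpoints for {service!r}")
        i = self._next[service] % len(endpoints)
        self._next[service] = i + 1
        return endpoints[i]


if __name__ == "__main__":
    registry = ServiceRegistry()
    for ip in ["10.0.0.1", "10.0.0.2", "10.0.0.3"]:
        registry.register("cart", f"{ip}:8080")
    print([registry.resolve("cart") for _ in range(4)])
    registry.deregister("cart", "10.0.0.2:8080")  # simulate a failed container
    print([registry.resolve("cart") for _ in range(2)])
```

Clients only ever ask for "cart". The registry absorbs containers coming and going, so no microservice needs its own discovery logic, and each request goes to the next endpoint in turn.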

An orchestration system serves as a dynamic, comprehensive infrastructure for a container-based application, allowing it to operate in a protected, highly organized environment while managing its interactions with the external world.

Kubernetes is now the leading choice for container orchestration, thanks to its modular architecture and vibrant community.

The relationship between Docker and Kubernetes

Kubernetes and Docker are both de facto standards for managing containerized applications, and both provide powerful capabilities. This has caused some confusion. “Kubernetes” is now sometimes used as shorthand for an entire container environment based on Kubernetes. In reality, the two are not directly comparable, have different roots, and solve different problems.

Docker is a platform and tool for building, distributing, and running Docker containers. It includes tools like Docker Desktop, Docker CLI, and Docker Daemon.

Kubernetes, on the other hand, is a container orchestration system that’s more extensive than Docker Swarm and coordinates clusters of nodes at scale. It runs containers built with Docker as well as other OCI-compliant images. It works around the concept of pods, which are scheduling units (and can contain one or more containers) in the Kubernetes ecosystem. Replicas of a pod are distributed among nodes to provide high availability. Images produced by a Docker build run on a Kubernetes cluster without modification, but Kubernetes itself is not a complete solution and is designed to be extended with plugins, for example for networking and storage.

Kubernetes and Docker are fundamentally different technologies, but they work very well together. Both facilitate the management and deployment of containers in a distributed architecture.

Can you use Docker without Kubernetes?

Docker is commonly used without Kubernetes. While Kubernetes offers many benefits, it’s notoriously complex, and there are many scenarios where the overhead of spinning up Kubernetes is unnecessary or unwanted.

In development environments, it’s common to use Docker without a container orchestrator like Kubernetes. In many production environments, too, the benefits of a container orchestrator may not outweigh the cost of added complexity. Additionally, public cloud providers like AWS, GCP, and Azure offer managed container services with built-in orchestration capabilities (for example, Amazon ECS, Google Cloud Run, and Azure Container Instances), which can make taking on the complexity of running Kubernetes yourself unnecessary.

Can you use Kubernetes without Docker?

As Kubernetes is a container orchestrator, it needs a container runtime in order to orchestrate. Kubernetes works with any runtime that implements the Container Runtime Interface (CRI), such as containerd and CRI-O, and both typically use runc as the low-level runtime that actually starts containers. Since Kubernetes 1.24 removed the built-in dockershim, Docker Engine can be used as a Kubernetes runtime only through the cri-dockerd adapter. The CNCF maintains a listing of container runtimes on its ecosystem landscape page, and the Kubernetes documentation provides specific instructions for getting set up using containerd and CRI-O.

Final thoughts: Docker and Kubernetes work best together

What’s the best choice, then? That’s not a trick question. The answer is the obvious one: both.

Although Kubernetes runs containers through other runtimes, images built with Docker run on those runtimes unchanged, and much of the Kubernetes ecosystem’s tooling and documentation was written with Docker in mind.

Together, they deliver:

- Reliable, repeatable container deployment

- Centralized management of containerized workloads across runtimes and nodes

- Resilient infrastructure with automated failover

- Support for cloud-native applications at scale

It was never really a question of Kubernetes vs Docker; it was always Kubernetes and Docker, and today this is even more true. For any team working with modern container technologies, adopting both Docker and Kubernetes provides the most robust and scalable foundation for building and running distributed apps.

Discover how Sumo Logic turns Kubernetes and Docker performance data into actionable insights. Sign up for a 30-day free trial.