Extended visibility with logs

Logs for Security collects and analyzes critical, often overlooked cloud-specific logs. Integrating logs from AWS, Google Cloud, and Azure delivers comprehensive monitoring to detect risks unique to cloud infrastructure. This expanded coverage empowers security teams to catch threats that standard tools may miss, while automated dashboards streamline compliance with frameworks like PCI, eliminating manual work.

Complete visibility and control over your AWS environments

Our unified interface streamlines investigations across cloud providers. With built-in threat intelligence from multiple trusted sources, you can enrich detections instantly with global context—no manual effort is required. Monitor, detect, and respond to threats swiftly, reducing the chance of vulnerabilities going unnoticed and strengthening your overall security posture.

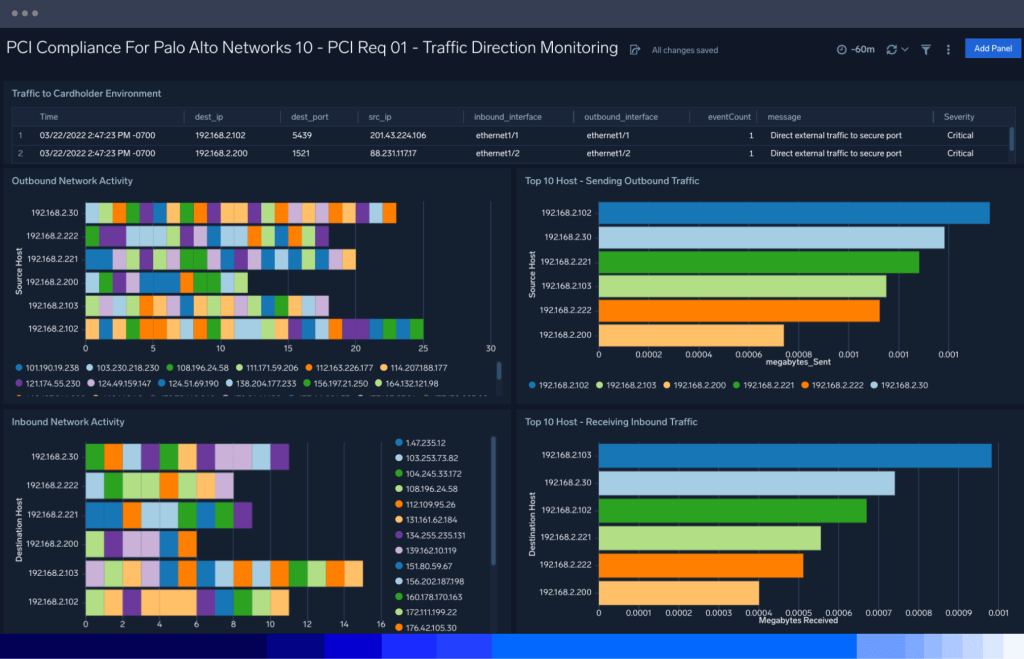

Effortless PCI compliance with real-time monitoring

Cut the challenges of time-consuming audits, manual compliance tracking, and balancing regulatory demands with pre-built PCI compliance dashboards. Our automation and dashboards continuously monitor for compliance violations, accelerating audits and eliminating hours of manual work. With real-time detection of non-compliance and flexible reporting, Sumo Logic helps security teams shift left on compliance.

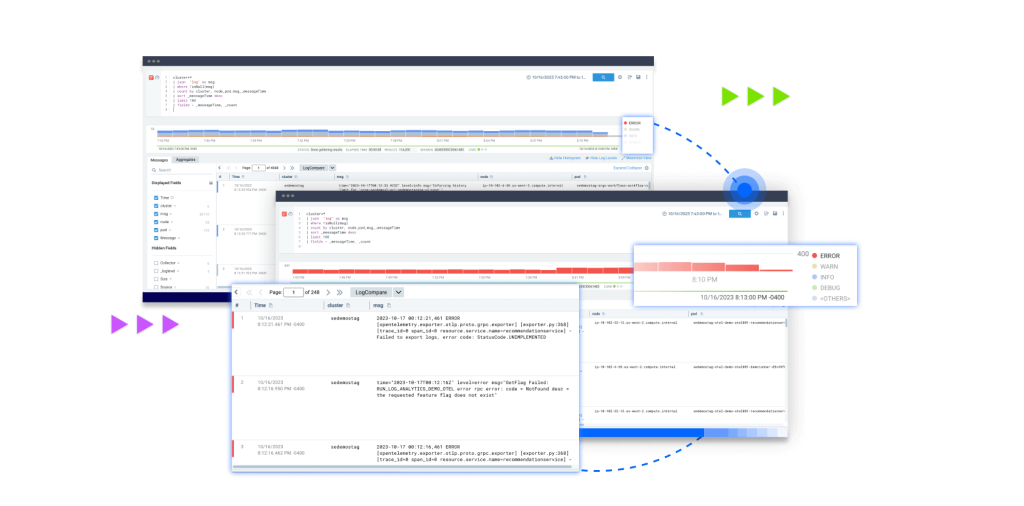

AI-driven investigation and response

Investigate incidents in seconds—not hours. Sumo Logic’s Mo Copilot uses AI-driven summaries and natural language queries to surface likely root causes, prioritize key events, and guide analysts through resolution. Whether junior or expert, your team investigates smarter with fewer clicks.

Know your cloud attack surface

Manage your attack surface, even in complex cloud environments, with Sumo Logic’s cloud-scale collection, storage and security analytics.

Combat complexity

Distill insights from across your entire microservices architecture and enable teams to collaborate and resolve the hardest questions facing digital companies.

Increase visibility

Accelerate security and reliability management workflows across development and operations, maintaining security visibility and managing your risk and cloud attack surface.

Maximize efficiency

Enable practitioners of all skill levels to easily manage their cloud attack surface with curated, out-of-the-box AI-driven security content. Security personnel can share dashboards and jointly resolve security issues as they arise from anywhere.

Optimize costs

Flex pricing lets you optimize your data analytics and pay for insights, not ingest. All data is stored compliantly, consistent with an extensive list of regulatory frameworks, without data rehydration or sacrificing performance.

Unified interface

All teams share visibility, with a single source of truth fueled by logs. Unlimited users and a unified interface power true DevSecOps.

Anomaly detection

Spot anomalies with precision. AI-driven alerts analyze 60 days of patterns, factoring in seasonality, to detect what’s truly unusual against baselines or expected values.
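
As a rough illustration of what a seasonality-aware baseline can look like, here is a minimal Python sketch. It is not Sumo Logic's algorithm: the function name `seasonal_anomalies`, the 24-sample cycle, and the three-standard-deviation threshold are all assumptions chosen for the example. Each point is compared with the other observations that fall in the same position of the cycle, so a busy weekday morning is judged against other mornings rather than against quiet nights.

```python
from statistics import mean, stdev


def seasonal_anomalies(counts, period=24, threshold=3.0):
    """Flag points that stray from the baseline for their slot in the cycle.

    counts:    numeric observations, oldest first (e.g. hourly event counts).
    period:    cycle length in samples (24 = daily cycle for hourly data).
    threshold: how many standard deviations count as unusual (an assumption).
    Returns a list of (index, value, expected) tuples sorted by index.
    """
    anomalies = []
    for slot in range(period):
        # Every observation that shares this position in the cycle.
        indices = range(slot, len(counts), period)
        if len(indices) < 3:
            continue  # too little history to form a baseline
        for i in indices:
            # Leave the point itself out so a spike cannot inflate
            # the baseline it is measured against.
            others = [counts[j] for j in indices if j != i]
            expected = mean(others)
            spread = stdev(others)
            deviation = abs(counts[i] - expected)
            # With zero spread, any nonzero deviation is flagged.
            if deviation > threshold * spread:
                anomalies.append((i, counts[i], expected))
    return sorted(anomalies)
```

Passing 60 days of hourly counts (1,440 values) gives each hour of the day 60 samples to form its baseline. A production system would typically also model weekly cycles and trends, which this sketch leaves out.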

FAQ

Still have questions?

The cloud attack surface refers to all the potentially exposed applications, networked devices and infrastructure components that threat actors could exploit within a cloud infrastructure or environment. Issues such as unpatched vulnerabilities in microservices architecture and misconfigurations can compromise the security of cloud-based systems, applications and data. The attack surface in a cloud environment is dynamic and can change as the cloud infrastructure evolves and new services, applications and configurations are introduced.

Common components of the cloud attack surface include:

- User accounts and credentials

- Application Programming Interfaces (APIs)

- Cloud databases or object storage

- Network connections, including virtual private clouds (VPCs) and public internet connections

- Virtual machines (VMs) and containers (Kubernetes)

- Data in transit (sent over a network)

- Data at rest (in cloud storage)

Infrastructure security in cloud computing refers to the practices, tools and measures to protect the underlying IT infrastructure and resources that make up a cloud computing environment. This includes safeguarding the physical data centers, servers, networking components and other hardware and the virtualization and management software enabling cloud services. Infrastructure security is a critical aspect of overall cloud security, as the integrity of these components is essential for the secure operation of cloud services.

Cybersecurity refers to the set of processes, policies and techniques that work together to secure an organization against digital attacks. Cloud security is a collection of procedures and technology designed to address external and internal security threats targeting virtual servers or cloud services and apps.

All data at rest within the Sumo Logic system is encrypted using strong AES 256-bit encryption. All spinning disks are encrypted at the OS level and all long-term data storage is encrypted using per-customer keys which are rotated every twenty-four hours.

The purpose of a security compliance audit is to assess and evaluate an organization’s adherence to specific security standards, regulations, or frameworks. Essentially, it answers the question, how are your current security controls meeting the security and privacy requirements of the protected assets?

In addition to assessing compliance, the cybersecurity audit helps identify potential security vulnerabilities, weaknesses, and risks, assesses the effectiveness of a company’s security controls and measures, verifies the existence and adequacy of security-related policies, procedures, and documentation, and ensures organizations meet legal and regulatory requirements of their industry.

In so doing, a cybersecurity compliance audit helps organizations improve their overall security posture and is evidence of an organization’s commitment to protecting its assets from potential threats and risks.

Compliance risk management refers to identifying, assessing, and mitigating risks associated with non-compliance with laws, regulations, industry standards, and internal security policies within an organization. It is an ongoing process that requires commitment, resources, and a proactive approach. It also involves establishing systematic approaches and controls to ensure that the organization operates within the boundaries of legal and regulatory requirements.

By effectively managing compliance risks, organizations can reduce legal and financial liabilities, protect their reputation, build trust with stakeholders, and create a more sustainable and ethical business environment.

In an external audit, a compliance auditor (also known as an external auditor or compliance officer) conducts an audit process to assess the internal policies of a company’s compliance program and determine whether it is fulfilling its compliance obligations.

Specific rules may vary depending on the audit framework or standard being used, but there are some general rules that apply universally.

Auditors must maintain independence and objectivity throughout the audit process, thoroughly document the process with a completed report, and adhere to a recognized compliance framework or standard, such as ISO 27001, NIST Cybersecurity Framework, PCI DSS, or industry-specific regulations.

The audit scope should be clearly defined, including the systems, processes and areas of the organization that will be assessed. Audits should take a risk-based approach, identifying and prioritizing higher-risk areas for detailed security assessment. Auditors then select a representative sample of systems, processes, or transactions for examination rather than auditing every item.
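
One way to picture the risk-based approach is the short Python sketch below. It is an illustration under stated assumptions, not a prescribed audit method: the function `select_audit_sample`, the `name` and `risk` fields, the 0.7 risk cutoff, and the 10% sample fraction are all hypothetical. Every higher-risk item is routed to detailed assessment, and a random sample is drawn from the remainder.

```python
import random


def select_audit_sample(items, high_risk_threshold=0.7, sample_fraction=0.1, seed=0):
    """Split audit items into a detailed-review set and a random sample.

    items: list of dicts with hypothetical 'name' and 'risk' (0.0-1.0) keys.
    Returns {'detailed': [...names], 'sampled': [...names]}.
    """
    rng = random.Random(seed)  # fixed seed keeps the selection reproducible
    high = [it for it in items if it["risk"] >= high_risk_threshold]
    rest = [it for it in items if it["risk"] < high_risk_threshold]

    # Sample a fraction of the lower-risk items, but at least one if any exist.
    k = min(len(rest), max(1, round(len(rest) * sample_fraction))) if rest else 0
    sampled = rng.sample(rest, k)

    return {
        "detailed": [it["name"] for it in high],
        "sampled": [it["name"] for it in sampled],
    }
```

Recording the seed alongside the audit report lets a reviewer reproduce exactly which items were sampled.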

When it’s time for an audit, the Sumo Logic platform increases understanding, streamlines the auditing process and ensures ongoing compliance with various security regulations and frameworks in the following ways:

- Centralize data collection, capturing a wide range of organizational data from wherever it originates, empowering organizations to monitor and learn from it.

- Make various data types available with 100% visibility and visualize them in compelling, configurable dashboards for real-time monitoring and insights.

- Find any data at any time using query language to create filters and search parameters — whether it relates to regulatory compliance or internal security controls.

- Leverage machine learning analytics to improve and streamline audit processes and expedite compliance using tools like our PCI Dashboard.

- Cost-effective data storage that maintains attestations, such as SOC 2 Type II, HIPAA, PCI Service Level 1 Provider, and a FedRAMP Moderate authorized offering.

- Real-time monitoring of incoming data and security controls to identify anomalies that could signal a security risk, cyber threat, vulnerability or non-compliance.

- Numerous data integrations and out-of-the-box applications that properly collect and catalog all data.