September 3, 2014

Docker is incredibly popular right now and is changing the way sysadmins, developers and engineers go about their day-to-day lives. This walkthrough isn’t going to introduce you to Docker; I’ll assume that you know what it is and how to use it. If not, don’t worry, here is a great place to start: What is Docker?

With Sumo Logic’s firm entrenchment in the DevOps and cloud culture, it’s no surprise that a large number of our customers are using Docker. Some are already pushing their Docker-related logs and machine data to Sumo Logic; others have reached out with interesting use cases, looking for guidance and best practices for getting their valuable machine data into Sumo Logic. This post will walk you through a few scenarios, each of which ultimately puts your machine data in one centralized location for troubleshooting and monitoring.

Four (OK, Five) Ways to Push Your Logs from Docker into Sumo Logic

Install a Sumo Logic Collector per Container

Before the Docker purists jump all over this one, let me clarify that I’m fully aware that this method may invoke some heated debate. If you believe in one application or service, and only one, per container, then skip to the next method. Otherwise, thanks for sticking around.

In this approach, we will install an ephemeral collector as part of a container build, deploy a sumo.conf file to provide authentication (and other information) and, optionally, deploy a sources.json file to provide paths to log files.

Here is an example Dockerfile to accomplish this task. Note that I deployed MongoDB as an example application, but this could represent any application; just modify accordingly.

    # Sumo Logic docker
    # VERSION 0.3
    # Adjusted to follow approach outlined here:
    # http://paislee.io/how-to-add-sumologic-to-a-dockerized-app/
    FROM phusion/baseimage:latest
    MAINTAINER Dwayne Hoover

    ADD https://collectors.sumologic.com/rest/download/deb/64 /tmp/collector.deb
    ADD sumo.conf /etc/sumo.conf
    # ADD sources.json /etc/sources.json

    # ensure that the collector gets started when you launch
    ADD start_sumo /etc/my_init.d/start_sumo
    RUN chmod 755 /etc/my_init.d/start_sumo
    # this start_sumo file should look something like this (minus the comments)
    # #!/bin/bash
    # service collector start

    # install deb
    RUN dpkg -i /tmp/collector.deb

    # let's install something
    # put your own application here
    RUN apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv 7F0CEB10
    RUN echo 'deb http://downloads-distro.mongodb.org/repo/ubuntu-upstart dist 10gen' | tee /etc/apt/sources.list.d/mongodb.list
    RUN apt-get update
    RUN apt-get install -y -q mongodb-org

    # Create the MongoDB data directory
    RUN mkdir -p /data/db

    EXPOSE 27017

    # ensure that mongodb gets started when you launch
    ADD start_mongo /etc/my_init.d/start_mongo
    RUN chmod 755 /etc/my_init.d/start_mongo

    CMD /sbin/my_init
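The Dockerfile expects a few helper files to sit next to it in the build context. As a rough sketch, the script below stages two of them: sumo.conf and start_sumo. The directory name `sumo-docker`, the collector name and the credential placeholders are all hypothetical, and the key names in sumo.conf (`name`, `accessid`, `accesskey`, `ephemeral`) reflect my understanding of that file format, so check them against the collector documentation before relying on them.

```shell
#!/bin/bash
# Sketch: stage the helper files that the Dockerfile's ADD lines expect.
set -e

# Hypothetical build-context directory; override with CONTEXT_DIR=...
CONTEXT_DIR="${CONTEXT_DIR:-./sumo-docker}"
mkdir -p "$CONTEXT_DIR"

# Collector registration settings. The values are placeholders and the key
# names are an assumption about the sumo.conf format.
cat > "$CONTEXT_DIR/sumo.conf" <<'EOF'
name=docker-mongo-collector
accessid=YOUR_ACCESS_ID
accesskey=YOUR_ACCESS_KEY
ephemeral=true
EOF

# Startup script that /sbin/my_init runs at container launch
# (same content as the commented example in the Dockerfile).
cat > "$CONTEXT_DIR/start_sumo" <<'EOF'
#!/bin/bash
service collector start
EOF
chmod 755 "$CONTEXT_DIR/start_sumo"

echo "Staged sumo.conf and start_sumo in $CONTEXT_DIR"
```

A start_mongo script would be staged the same way. With the Dockerfile in the same directory, something like `docker build -t my-mongo-sumo ./sumo-docker` would then build the image (the tag is made up for this example).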

It’s worth noting that the container starts the services specified in the scripts deployed to your /etc/my_init.d directory when /sbin/my_init runs at container launch. Unless you configure your application otherwise, its logs will go straight to STDOUT and will not be available as a file path to the collector that you just installed.

Simply add the additional paths that you are interested in gathering machine data from and it will flow into your Sumo Logic account while the container is running.
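As an illustration of adding a path, the sketch below generates a sources.json containing a single Local File source. The log path, source name and category are assumptions chosen for the MongoDB example, and the JSON layout follows the collector's source-definition format as I understand it; treat it as a starting point rather than a reference.

```shell
#!/bin/bash
# Sketch: generate a sources.json with one Local File source.
set -e

OUT_FILE="${OUT_FILE:-./sources.json}"               # hypothetical output location
LOG_PATH="${LOG_PATH:-/var/log/mongodb/mongod.log}"  # assumed in-container log path

# The application must actually write to LOG_PATH; output that only goes
# to STDOUT will not be picked up by a file source.
cat > "$OUT_FILE" <<EOF
{
  "api.version": "v1",
  "sources": [
    {
      "sourceType": "LocalFile",
      "name": "mongodb-log",
      "pathExpression": "$LOG_PATH",
      "category": "docker/mongodb"
    }
  ]
}
EOF

echo "Wrote $OUT_FILE"
```

To use it, uncomment the `ADD sources.json /etc/sources.json` line in the Dockerfile. You will most likely also need a `sources=/etc/sources.json` entry in sumo.conf so the collector knows where to find the file.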

Collect Local JSON Logs From the Docker Host

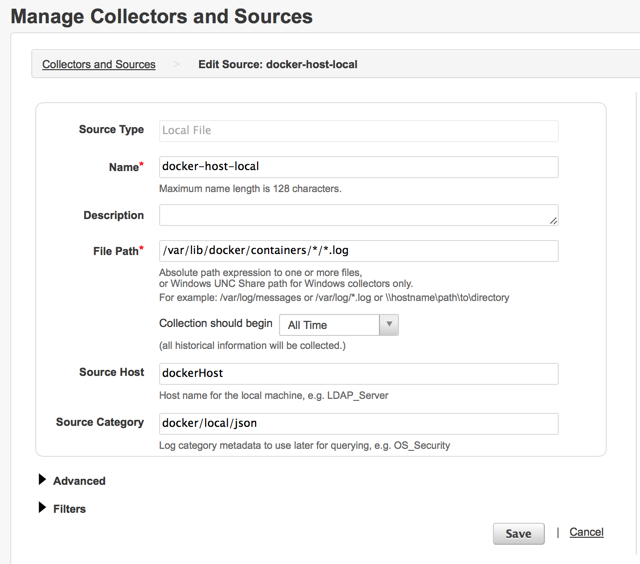

If you would like to see all of the log data from all of your containers, it’s as easy as installing a collector on the Docker host and pointing it to the directory containing the container logs.

Once installed and registered, point it to the Docker logs:

By default, the Docker logs are located at: /var/lib/docker/containers/[container-id]/[container-id]-json.log

These logs are in JSON format and are easily parsed using our anchor-based parse operator or our JSON operator. For more information, see the following:

/01-Parse-Predictable-Patterns-Using-an-Anchor

/03-Parse-JSON-Formatted-Logs
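Before configuring the source, it can help to confirm which files the collector will see. The sketch below lists the per-container JSON log files under Docker's default data directory; the function name `list_container_logs` is made up for this example, and the sample log line in the comment is illustrative rather than captured output.

```shell
#!/bin/bash
# Sketch: list the per-container JSON log files on the Docker host.

# Print every <container-id>-json.log under the given root
# (defaults to Docker's standard container directory).
list_container_logs() {
  local root="${1:-/var/lib/docker/containers}"
  find "$root" -mindepth 2 -maxdepth 2 -type f -name '*-json.log' 2>/dev/null | sort
}

# Each line in these files is a JSON object with "log", "stream" and "time"
# keys, for example (illustrative):
# {"log":"waiting for connections on port 27017\n","stream":"stdout","time":"2014-09-03T12:00:00.000000000Z"}

list_container_logs "$@"
```

Reading these files usually requires root on the host. A single wildcard path expression such as `/var/lib/docker/containers/*/*-json.log` should cover all containers, assuming your collector version accepts wildcards in the source path.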

Stream the Live Logs From a Container to a Sumo Logic HTTP Endpoint

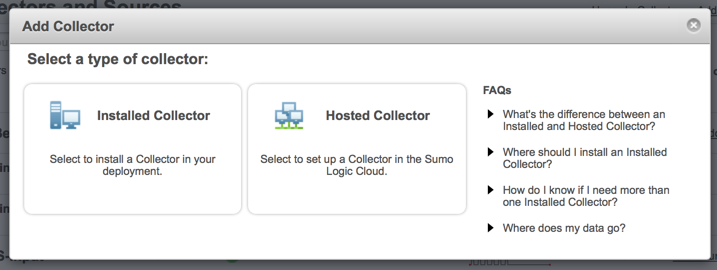

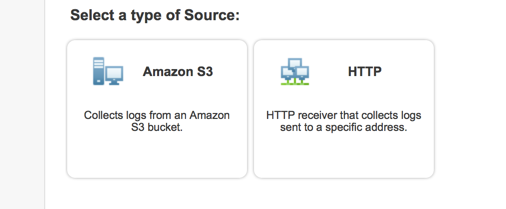

If you don’t want to install a collector, you can use a “Hosted Collector” and push your log messages from a container to your unique HTTP endpoint. This is done on the Docker host and utilizes the “docker logs” command.

First, configure a hosted collector with an HTTP Source:

/Configure-a-Hosted-Collector

/HTTP-Source

The basic steps here are to choose “Add Collector”, then “Hosted Collector”, and then choose “Add Source”, selecting HTTP. Once the source metadata is entered, you will be provided a unique URL that can accept incoming data via HTTP POST. Scripting a basic wrapper for curl will do the trick. Here is an example:

    #!/bin/bash
    # Forward each line read from STDIN to the Sumo Logic HTTP source.
    URL="https://collectors.sumologic.com/receiver/v1/http/[your unique URL]"
    while IFS= read -r data; do
      curl --data "$data" "$URL"
    done

Edit the URL variable to match the unique URL you obtained when setting up the HTTP source.

Using the docker logs command, you can follow the STDOUT data that your container is generating. Assuming that we named our curl wrapper “watch-docker.sh” and we are running a container named “mongo_instance_002” we could issue a command like:

nohup sh -c 'docker logs --follow=true --timestamps=true mongo_instance_002 | ./watch-docker.sh' &

While the container is running, its output will be sent directly to Sumo Logic. Note that “follow” is set to true. This ensures that we are collecting a live stream of the STDOUT data from the container. I’ve also chosen to turn on timestamps so that Sumo Logic will use the timestamp from the docker logs command when the data is indexed.
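If you run more than one container, the same command can be repeated per container. The sketch below is one way to do that under a few assumptions: the wrapper is the watch-docker.sh script described above, the function name `follow_command` and the `DRY_RUN` switch are invented for this example, and by default the script only prints the commands instead of running them.

```shell
#!/bin/bash
# Sketch: stream the logs of several containers through the curl wrapper.

WRAPPER="${WRAPPER:-./watch-docker.sh}"   # the curl wrapper from this post

# Print the pipeline that streams one container's logs to Sumo Logic.
follow_command() {
  local container="$1"
  printf 'docker logs --follow=true --timestamps=true %s | %s\n' "$container" "$WRAPPER"
}

# One streamer per container name given on the command line.
# DRY_RUN=1 (the default here) prints the commands; DRY_RUN=0 runs them
# in the background.
for container in "$@"; do
  if [ "${DRY_RUN:-1}" = "1" ]; then
    follow_command "$container"
  else
    nohup sh -c "$(follow_command "$container")" >/dev/null 2>&1 &
  fi
done
```

Saved as, say, `stream-all.sh` (a hypothetical name), `DRY_RUN=0 ./stream-all.sh mongo_instance_001 mongo_instance_002` would start one background streamer per container. Each streamer ends when its container stops, so it has to be restarted along with the container.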

For more information on docker logs, go here.

Bind Your Containers to a Volume on the Host and Collect There

Docker provides the ability to mount a volume on the host to the container. I won’t go into much detail about how to achieve this, since different methods may be applicable for your needs; check out the Docker documentation.

Assuming that your dockerized applications are logging to a predefined directory and that directory is mounted to the host filesystem, you can install a Sumo Logic collector on your Docker host and create a new source that monitors the shared volume that you are logging to.
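A minimal sketch of that setup follows. The host directory, the in-container log directory and the image name are all hypothetical. The script creates the host directory and prints a `docker run` command that bind-mounts it with `-v`, without actually starting a container.

```shell
#!/bin/bash
# Sketch: prepare a host directory and compose a docker run command
# that bind-mounts it into the container.
set -e

HOST_LOG_DIR="${HOST_LOG_DIR:-/tmp/docker-logs/mongo}"  # hypothetical host path
CONTAINER_LOG_DIR="/var/log/mongodb"                     # assumed path the app logs to
IMAGE="${IMAGE:-my-mongo-image}"                         # hypothetical image name

mkdir -p "$HOST_LOG_DIR"

# -v HOST_PATH:CONTAINER_PATH makes the host directory visible inside the container.
RUN_CMD="docker run -d --name mongo_instance_002 -v $HOST_LOG_DIR:$CONTAINER_LOG_DIR $IMAGE"

echo "$RUN_CMD"
```

The collector on the host would then get a Local File source pointing at the host directory, for example `/tmp/docker-logs/mongo/*.log` with the defaults used here.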

There are some concerns that this could open up a potential attack vector if your container is compromised, so please ensure that the necessary security measures have been put into place. For internal use cases on an already secured environment, this is likely a non-factor.

*BONUS* Install a Collector Running in a “Helper” Container

Borrowing from Caleb Sotelo’s post, it’s possible to stick to the “one process, one container” mentality and run a Sumo Logic collector within its own Docker container.

You can create a container that only contains a Sumo Logic collector. Assuming that this container has the ability to see other containers in your Docker environment, you can configure data sources to pull logs from other containers. This can be done over SSH using remote sources in Sumo Logic or by utilizing shared volumes and using Local File sources.

Setting up remote sources requires SSH access either via a shared key or username/password combo. Here is some additional detail on setting up remote sources.
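For the shared-volume variant, one possible arrangement is sketched below: the application container declares its log directory as a volume, and the collector container mounts that volume with `--volumes-from`. The container names, image names and log directory are hypothetical, and the script only prints the two commands rather than running them.

```shell
#!/bin/bash
# Sketch: compose docker run commands for an app container and a
# collector-only "helper" container that shares the app's log volume.

APP_NAME="${APP_NAME:-mongo_instance_002}"
APP_IMAGE="${APP_IMAGE:-my-mongo-image}"                 # hypothetical image name
COLLECTOR_IMAGE="${COLLECTOR_IMAGE:-my-sumo-collector}"  # hypothetical image name
LOG_DIR="/var/log/mongodb"                               # assumed path the app logs to

# The app container exposes its log directory as a volume.
APP_CMD="docker run -d --name $APP_NAME -v $LOG_DIR $APP_IMAGE"

# The helper container mounts every volume of the app container at the same path.
COLLECTOR_CMD="docker run -d --name sumo_collector --volumes-from $APP_NAME $COLLECTOR_IMAGE"

echo "$APP_CMD"
echo "$COLLECTOR_CMD"
```

Inside the helper container, a Local File source pointed at `/var/log/mongodb` would then see the application's log files.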

Please note, this is dependent upon the configuration of your Docker environment supporting communication between containers via SSH or shared volumes and is a bit out of scope for this walkthrough. For some additional information, check these out:
